Machine Learning Research

Long Context Gets Up to Speed: AI21 Labs’ Jamba 1.5 outpaces transformers in long-text processing

A new model generates tokens faster than current transformers, especially when processing long inputs.

Machine Learning Research

A new model generates tokens faster than current transformers, especially when processing long inputs.

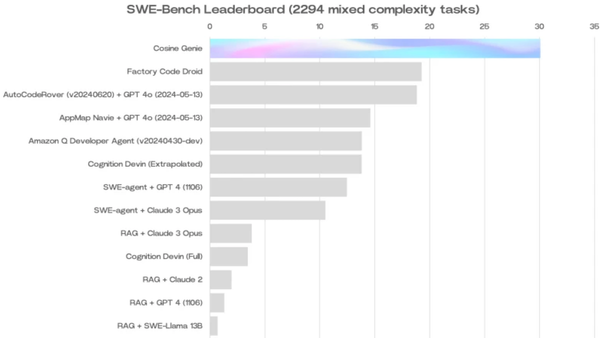

Generative AI

An agentic coding assistant raised the state of the art on an important benchmark by more than 30 percent.

Machine Learning Research

Robots equipped with large language models are asking their human overseers for help.