Machine Learning Research

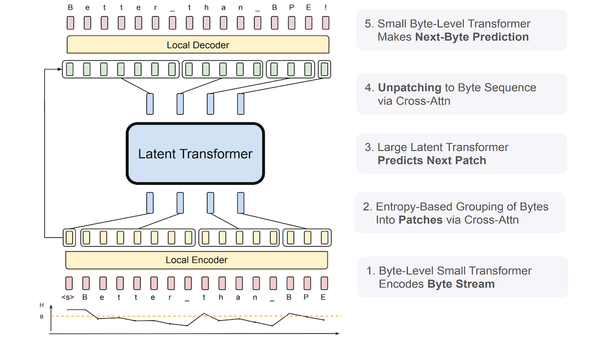

Toward LLMs That Understand Misspellings: New byte-based model beats Llama 3 on spelling, noise, and translation

Researchers built a byte-based model that is more robust to noisy inputs such as misspellings, better at character-level tasks such as counting the R's in "strawberry," and potentially better able to understand unfamiliar languages that share groups of letters with familiar ones.