Machine Learning Research

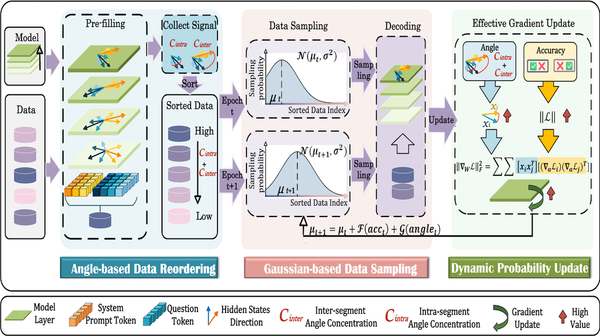

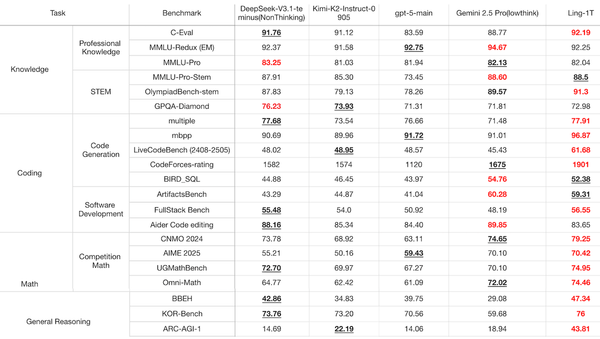

Reasoning Without “Thinking”: All about Ant Group’s Ling-1T, an open, non-reasoning model that outperforms closed competitors

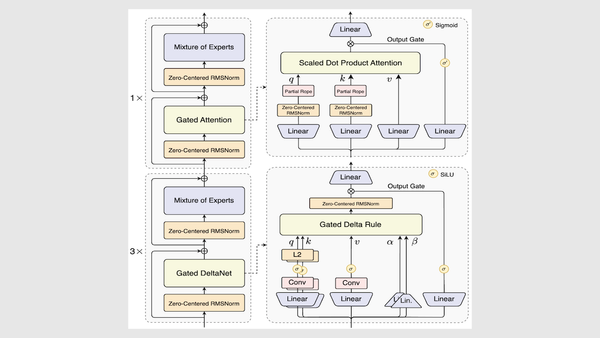

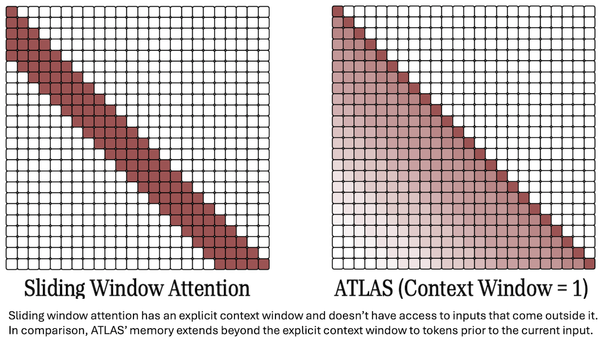

Reasoning models typically learn to undertake a separate process of “thinking” through their output before they produce a final response. Ant Group built a top non-reasoning model that can take similar steps as part of its immediate response.