Machine Learning Research

Getting the Facts Right: A memory method that reduces hallucinations in LLMs

Large language models that remember more hallucinate less.

Machine Learning Research

OpenAI launched not only its highly anticipated o1 model but also an operating mode that enables the model to deliver higher performance — at a hefty price.

Machine Learning Research

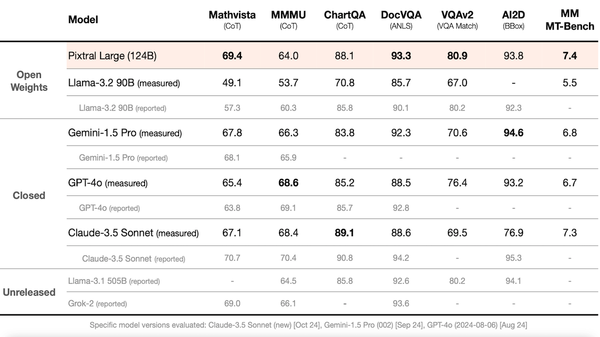

Mistral AI unveiled Pixtral Large, which rivals top models at processing combinations of text and images.

Business

One of the world’s biggest payment processors is enabling large language models to spend real money.

Business

Amazon and Anthropic expanded their partnership, potentially strengthening Amazon Web Services’ AI infrastructure and lengthening the high-flying startup’s runway.

Machine Learning Research

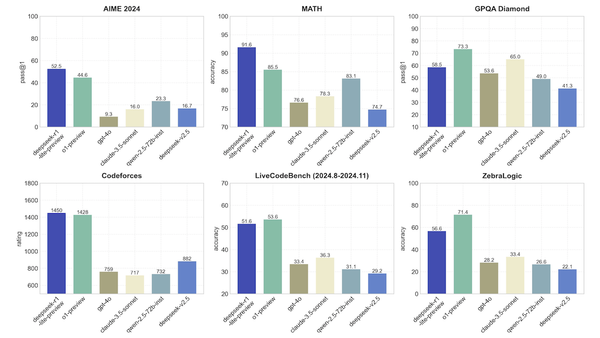

An up-and-coming Hangzhou AI lab unveiled a model that implements run-time reasoning similar to OpenAI o1 and delivers competitive performance. Unlike o1, it displays its reasoning steps.

Machine Learning Research

Researchers cut the processing required to train transformers by around 20 percent with only a slight degradation in performance.

Machine Learning Research

Builders of large AI models have relied on the idea that bigger neural networks trained on more data and given more processing power would show steady improvements. Recent developments are challenging that idea.

Machine Learning Research

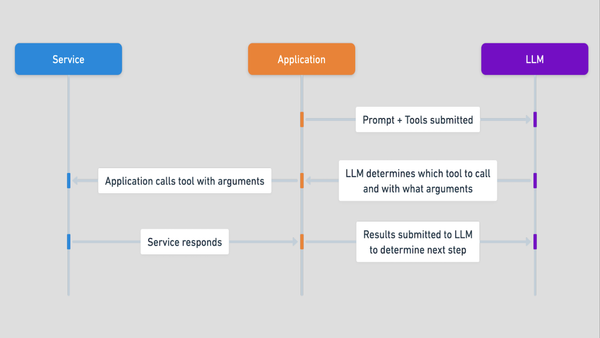

API commands for Claude 3.5 Sonnet enable Anthropic’s large language model to operate desktop apps much like humans do. Be cautious, though: It’s a work in progress.

Machine Learning Research

Mistral AI launched two models that raise the bar for language models with 8 billion or fewer parameters, small enough to run on many edge devices.

Business

Once hailed by OpenAI chief Sam Altman as the “best bromance in tech,” the partnership between Microsoft and OpenAI is facing challenges as both companies seek greater independence.

Machine Learning Research

Large language models can process small spreadsheets, but very large spreadsheets often exceed their limits for input length. Researchers devised a method that processes large spreadsheets so LLMs can answer questions about them.