Style Upgrade

Image-to-image translation, in which stylistic features from one image are imposed on the content of another to create a new picture, traditionally has been limited to translating either shapes or textures. A new network translates both.

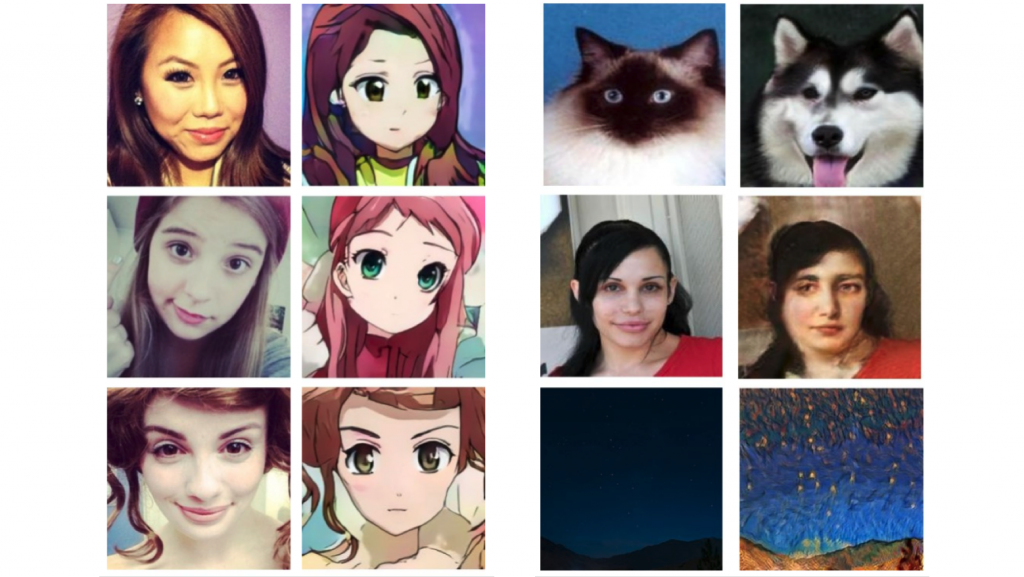

Image-to-image translation, in which stylistic features from one image are imposed on the content of another to create a new picture, traditionally has been limited to translating either shapes or textures. A new network translates both, allowing more flexible image combinations and creating more visually satisfying output.

What’s new: A team from Boeing’s South Korea lab created U-GAT-IT, a network that produces superior translations between images.

Key insights: Where earlier image-to-image translation networks work best with particular image styles, U-GAT-IT adds layers that make it useful across a variety of styles.

- Such networks typically represent shapes and textures in hidden feature maps. U-GAT-IT adds a layer that weights the importance of each feature map based on each image’s style.

- The researchers also introduce a layer that learns which normalization method works best.

How it works: U-GAT-IT uses a typical GAN architecture: A discriminator classifies images as either real or generated and a generator tries to fool the discriminator. It accepts two image inputs.

- The generator takes the images and uses a CNN to extract feature maps that encode shapes and textures.

- In earlier models, feature maps are passed directly to an attention layer that models the correspondence between pixels in each image. In U-GAT-IT, an intermediate weighting layer learns the importance of each feature map. The weights allow the system to distinguish the importance of different textures and shapes in each style.

- The weighted feature maps are passed to the attention layer to assess pixel correspondences, and the generator produces an image from there.

- The discriminator takes the first image as a real-world style example and the second as a candidate in the same style that’s either real or generated.

- Like the generator, it encodes both images to feature maps via a CNN and uses a weighting layer to guide an attention layer.

- The discriminator classifies the candidate image based on the attention layer’s output.

Results: Test subjects chose their favorite images from a selection of translations by U-GAT-IT and four earlier methods. The subjects preferred U-GAT-IT’s output by up to 73% in four out of five data sets.

Why it matters: Image-to-image translation is a hot topic with many practical applications. Professional image editors use it to boost image resolution and colorize black-and-white photos. Consumers enjoy the technology in apps like FaceApp.

We’re thinking: The best-performing deepfake networks lean heavily on image-translation techniques. A new generation that takes advantage of U-GAT-IT’s simultaneous shape-and-texture modeling may produce even more convincing fake pictures.