People With AI Friends Feel Worse: Study shows heavy use of AI companions correlates with lower emotional well-being

People who turn to chatbots for companionship show indications of lower self-reported well-being, researchers found.

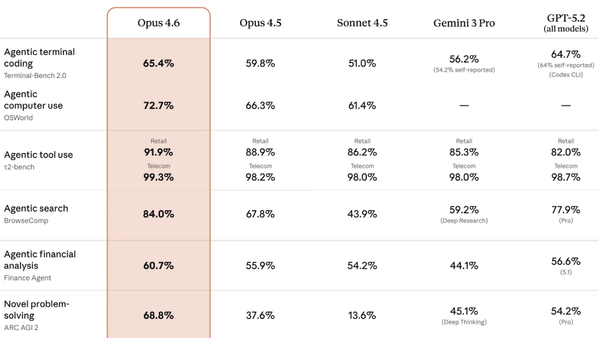

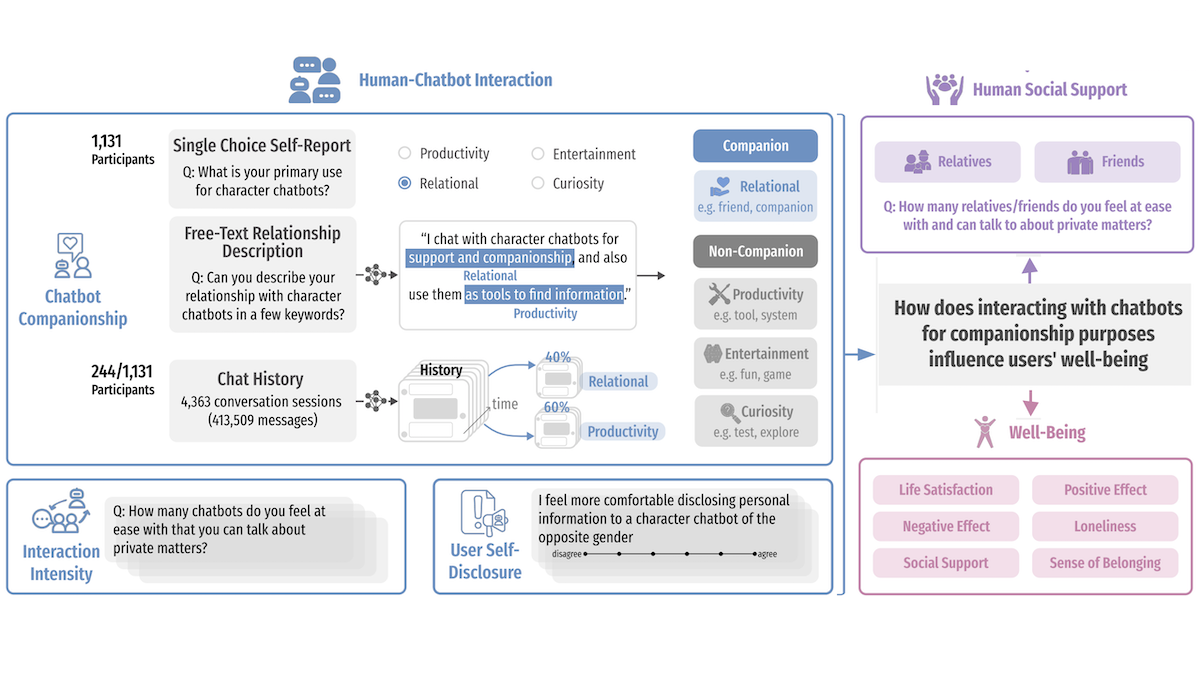

What’s new: Yutong Zhang, Dora Zhao, Jeffrey T. Hancock, and colleagues at Stanford and Carnegie Mellon examined correlations between users’ chatbot usage and psychological health. The more frequently users chatted, shared personal information, and went without human social relationships, the lower they rated their own well-being, the authors found.

Key insight: Chatbot users may not report the subject matter of their conversations accurately, but LLMs can identify and summarize topics in chat histories. This makes it possible to correlate the intensity and depth of chats with self-reported measures of well-being, such as loneliness and satisfaction.

How it works: The authors surveyed 1,131 users of the chatbot service Character.AI, which provides chatbots for purposes like roleplay, conversation, and education. In addition, they gathered 413,509 messages from 4,363 conversations with 244 participants who agreed to share their chat logs.

- The authors gauged the users’ stated motivations for using chatbots versus their likely motivations. They asked the users to select one of the following motivations: productivity, curiosity, entertainment, or companionship. They also asked them to describe freely why they used Character.AI. GPT-4o classified the descriptions of chatbot usage according to the four categories of motivation, giving the authors a more nuanced view.

- They surveyed users to measure the intensity of their chatbot interactions, including how long they conversed and how comfortable they felt disclosing sensitive personal information.

- They also surveyed users to measure their human social support, including how many close human relationships they had.

- Finally, they asked questions to measure the users’ well-being based on six factors: satisfaction, loneliness, sense of belonging, positive emotions, negative emotions, and perceived social support.

- LLaMA-3-70B summarized conversations and fed the summaries to TopicGPT, which identified recurring themes.

Results: The authors computed correlations among the various signals and the six measures of well-being. They found that most users turned to chatbots for companionship, whether or not they selected companionship as a motivation for their chats. Furthermore, reliance on chatbots for companionship indicated lower well-being.

- 12 percent of users surveyed selected companionship as their primary reason for using Character.AI, but 51 percent described their chatbot as a friend, companion, or romantic partner. The chat logs showed much higher use of chatbots as companions: 93 percent of users had at least one conversation that showed companion-like engagement, 80 percent of chat sessions involved emotional and social support, and 68 percent involved romantic or intimate roleplay.

- Greater use of chatbots for companionship correlated with lower apparent well-being. This effect was strongest when companionship was the main reason given in the multiple-choice survey (a correlation of -0.47 with well-being, where -1 indicates the strongest association with lower well-being, 0 indicates no correlation, and 1 indicates the strongest association with greater well-being).
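To make the correlation scale concrete, here is a minimal sketch of how a coefficient between -1 and 1 can be computed. It assumes a Pearson correlation, which this summary does not specify, and the variable names and toy values are invented for illustration; they are not data from the study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations from the means (unnormalized covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations (unnormalized variances).
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Toy values invented for illustration only; NOT data from the study.
# Hypothetical 1-to-5 ratings: higher means more companionship use / higher well-being.
companionship_use = [1, 2, 3, 4, 5]
well_being = [5, 3, 4, 2, 1]

r = pearson(companionship_use, well_being)
print(f"r = {r:.2f}")  # prints "r = -0.90"
```

A negative value like this one means that higher scores on one variable tend to accompany lower scores on the other. It says nothing about which variable, if either, drives the other.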

Yes, but: The authors found a consistent correlation between chatbot companionship and lower well-being, but they didn’t establish causation. The data shows that people who sought companionship from chatbots likely struggled with loneliness or a lack of close social connections. It remains unclear whether loneliness caused the users to use chatbots for companionship or vice versa, or whether using chatbots relieved or exacerbated their loneliness.

Behind the news: AI companions have been shown to bring both benefit and harm. Some studies report short-term benefits like reduced loneliness and emotional relief. Users say chatbots are nonjudgmental and easy to talk to. But other work has found emotional overdependence, distorted relationship expectations, and harmful behavior encouraged by unmoderated bots.

Why it matters: Increasingly, people converse with chatbots as an alternative to human conversation. Chatbot builders must be aware of the potential pitfalls of using their products and conduct research sufficient to enable them to build more beneficial bots. Of course, society also has a role to play by fostering social support through access to community, care infrastructure, and mental-health services.

We’re thinking: Whether it’s beneficial or not, developers are building chatbots that aim to form relationships with people. Such relationships appear to fulfill many of the same needs as human relationships, and they do so in ways that many people, for a wide variety of reasons, find more practical or comfortable. Some developers may be tempted to exploit such needs for profit, but we urge them to design apps that focus on strengthening human-to-human relationships.