Generated Data for Training Web Agents: Researchers scale up production of training data for web agents

Developing an agent that navigates the web can involve a lot of human effort spent annotating training examples to fine-tune the agent’s LLM component. Scientists automated the production of data that fine-tuned LLMs effectively for web tasks.

Developing an agent that navigates the web can involve a lot of human effort spent annotating training examples to fine-tune the agent’s LLM component. Scientists automated the production of data that fine-tuned LLMs effectively for web tasks.

What’s new: Brandon Trabucco and colleagues at Carnegie Mellon University and Amazon generated a dataset that enabled an agent based on a small model to outperform agents equipped with much larger models. The data is freely available for noncommercial and commercial uses under an MIT license.

Key insight: In a dataset for training agentic LLMs to use the web, each example typically includes a web site, task (such as comparing prices of items for sale), and a paired list of web pages (represented as markdown or screenshots) and desired actions (clicking a link, typing in a form, and so on) that complete the task. Typically, such examples are limited in the tasks and websites they illustrate. An LLM equipped with the proper tools and know-how to use a browser can build much larger and more diverse datasets automatically.

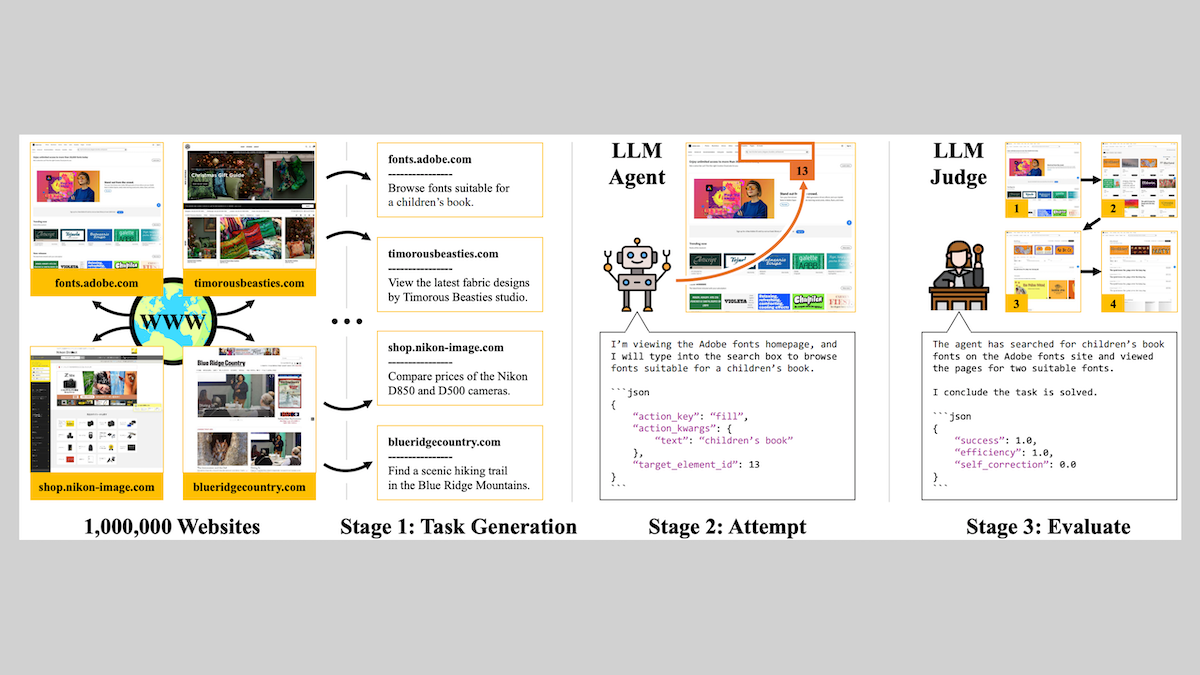

How it works: The authors built an agentic workflow that prompted Qwen3-235B and other models to produce a web-agent training dataset. From the massive web dataset Common Crawl, they selected the 1 million web sites with the highest Google PageRank.

- The dataset-builder agents identified 150,000 web sites that were accessible without registration, free of malware, and free of objectionable content.

- They generated simple tasks such as “Compare prices of the Nikon D850 and D500 cameras,” "Browse fonts suitable for a children’s book, " and "Find a scenic hiking trail in the Blue Ridge Mountains." Viable tasks were describable in up to 20 words and didn’t require logging in, modifying a web site (for instance, creating an account or post), or using other web sites.

- The agents attempted to complete each task by choosing a sequence of actions drawn from the browser automation library Playwright. Iteratively, they received web pages in which each page element had a corresponding ID (in markdown format) and generated a description of anactions to perform and the element to perform it on; for example { "action_key": "click", “target_element_id": 5 }.

- A separate copy of Qwen3 235B evaluated the generated action sequence and corresponding web pages to determine how well an agent had performed each task. It judged 10,500 tasks to have been completed successfully with 100 percent confidence.

- The authors fine-tuned Qwen3-1.7B on those examples.

Results: Using their generated training set, the authors fine-tuned a variety of models, including Qwen3-1.7B. They coupled each model — in both stock and fine-tuned versions — with an agentic framework. They asked the resulting agents complete (i) a generated test set (3,000 tasks on 3,000 web sites) and (ii) WebVoyager (643 tasks on 15 web sites). Four leading models (Qwen3-235B, Gemini 2.5 Flash, Llama 4 Maverick, and GPT 4.1 Nano) separately judged whether the agents had completed the tasks.

- The fine-tuned Qwen3-1.7B vastly outperformed its stock counterpart (11.5 percent), according to all four model judges. It achieved 56. percent versus the stock model’s 11.5 percent according to the Qwen3-235B judge.

- The fine-tuned Qwen3-1.7B fared well compared to much larger models that had not been fine-tuned, specifically Qwen3-235B, Gemini 2.5 Flash, and Llama 4 Maverick. It completed more tasks than two of the larger models, according to three out of the four judges.

- The fine-tuned Qwen3-1.7B generalized well to WebVoyager’s test set, completing more tasks than two of the larger models according to two out of the four judges.

Why it matters: Previous datasets designed to fine-tune LLMs for agentic tasks, such as WebVoyager, Mind2Web, and WebLINX, are limited to hundreds or thousands of web sites. That may not be enough to generalize reliably to a wide variety of web sites and tasks. The authors built a dataset that enables LLMs to generalize more broadly, and they shared their dataset and recipe.

We’re thinking: This work takes advantage of computer use to generate datasets that reflect the immense variety of potential web tasks. Computer use is an exciting area, but leading approaches are still unreliable. As this field progresses, we expect it to open up a huge range of applications.