The Batch Newsletter

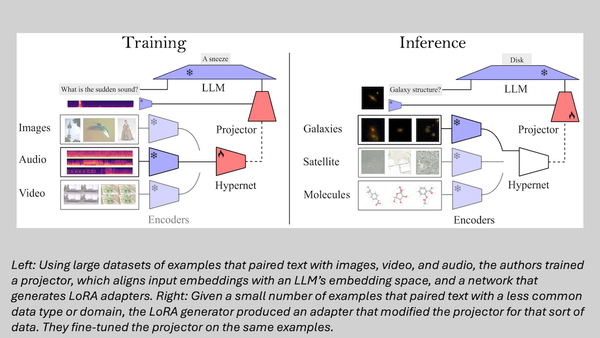

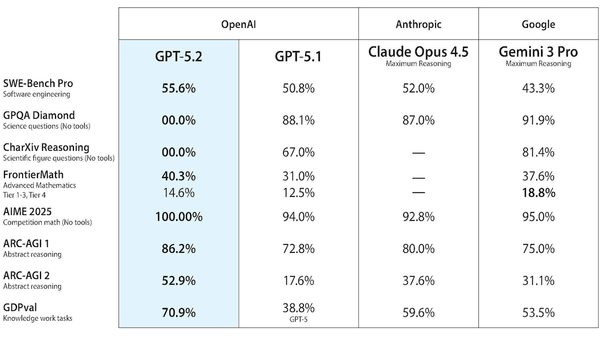

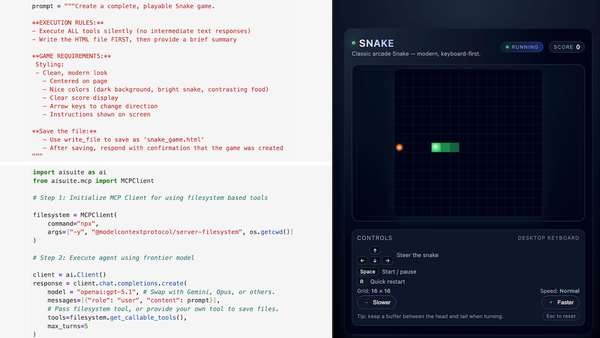

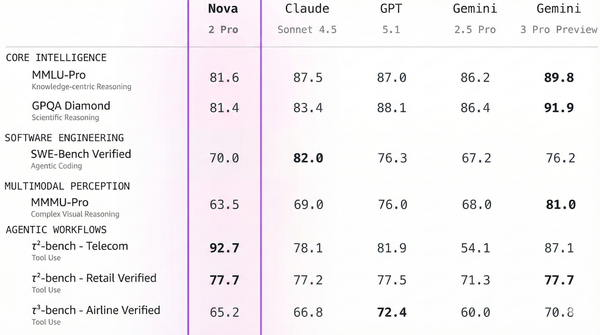

OpenAI’s Answer to Gemini 3, Runway’s Interactive Worlds, Disney’s Alliance With OpenAI, Adapting LLMs for Low-Data Domains

The Batch AI News and Insights: As amazing as LLMs are, improving their knowledge today involves a more piecemeal process than is widely appreciated.