The Year AI Went Industrial: The State of AI Report 2025 says AI’s barriers aren’t technological but social and material

A year-in-review report heralds the dawn of AI’s industrial era.

What’s new: The eighth annual State of AI Report 2025 aims to reflect the trajectory of AI through a selection of significant work from the past 12 months. It declares 2025 to be the beginning of the industrial age of AI, noting that the barriers to the technology’s economic potential have shifted from technical limitations to matters of capital, politics, and physics. Nathan Benaich, a venture investor, led the effort and acknowledges unspecified conflicts of interest.

How it works: The sprawling 300-slide deck highlights the year’s progress in research, industry, politics, and security.

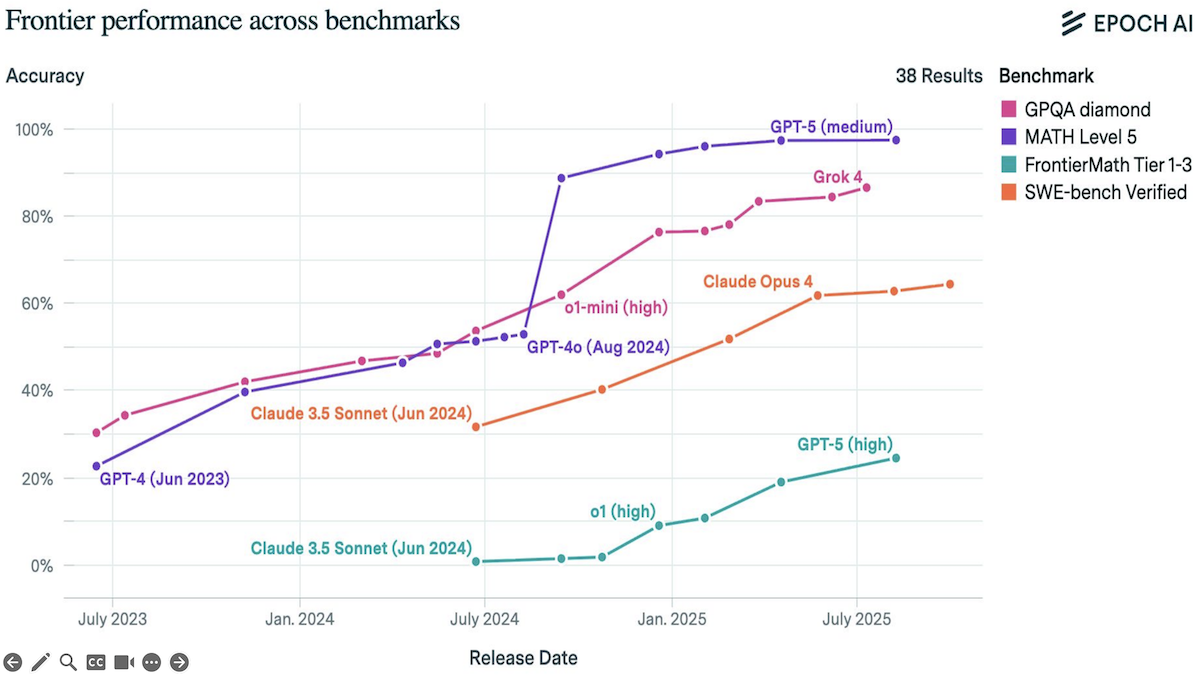

Research: Introduced late last year, reasoning models have redefined the capabilities of large language models. OpenAI’s closed models retained their lead despite strong progress among open-weights competitors, especially China-based developers DeepSeek, Alibaba, and Moonshot. Such models showed significant gains in efficiency, shrinking numbers of trainable parameters by as much as 50 times while maintaining high performance. Models from OpenAI, Google, and Harmonic achieved gold-level performance on problems from the International Mathematical Olympiad, and the medical dialog model AMIE outperformed unassisted doctors in diagnostic accuracy.

Industry: Demand for AI services mounted. According to Ramp Business Corporation, which maintains an index of AI adoption by U.S. companies, 44 percent of U.S. companies pay for AI tools, up from 5 percent in 2023. A cohort of 16 companies made nearly $18.5 billion in annualized revenue as of August, demonstrating a business case that gave some companies the confidence to extend their financial commitments into hundreds of billions of dollars. Anticipating further growth, OpenAI and others committed hundreds of billions of dollars to build data centers, and the availability of electrical power to drive such facilities emerged as a major issue that will shape the path forward. Among providers of closed models, OpenAI led not only in capability but also in price: GPT-5 costs one-twelfth as much as Anthropic Claude Opus for roughly comparable performance.

Politics: National regulators in Europe and the U.S. backed off as they faced the prospect that overregulation might stymie AI’s potential to drive economic growth. OpenAI, Meta, Google, and others lobbied to pre-empt state-level laws even as California forged ahead with its own legislation, which Anthropic supported. Internationally, the race to advance AI technology intensified. The U.S. launched an America-first AI strategy, blocking rivals’ access to U.S. AI technologies, distributing those technologies to allies, expediting permits for data-center sites, and providing the sites themselves. China responded by accelerating its efforts to build its domestic AI industry, and Chinese companies displaced Meta as premier suppliers of open-weights models.

Security: Cybersecurity concerns rose as one analysis estimated that offensive capabilities are doubling every 5 months. Criminals successfully used Claude Code to create false identities that gained remote employment at Fortune 500 companies, and researchers demonstrated that it’s possible to disable safety guardrails of open-weights models using minimal processing power. Anthropic and OpenAI responded to concerns that their models might be used to develop biological or chemical weapons by adopting preemptive safety measures.

Why it matters: State of AI Report 2025 brings into focus notable trends in AI over the past year and presents them with detailed context and evidence. It’s chock-full of information that weaves diverse threads into coherent lines of progress. Moreover, it provides a consistent perspective on outstanding developments from year to year.

We’re thinking: By the authors’ own reckoning, half of their 2024 predictions came to pass (more or less). This year’s predictions mostly seem like matters of course. For instance, AI agents will account for more than 5 percent of a major retailer’s annual online sales, a movie produced using AI will attract a large audience, and resistance to building data centers will sway U.S. state-level elections. But they also include the alarming, and imaginable, prospect that an event driven by deepfakery or agents will trigger a NATO emergency. The need for AI practitioners to attend to ethical and security concerns is as great as ever.