Improve Agentic Performance with Evals and Error Analysis, Part 1: When AI agentic systems go astray, it’s tempting to shortcut evals and error analysis. But these processes can lead to much faster progress.

Dear friends,

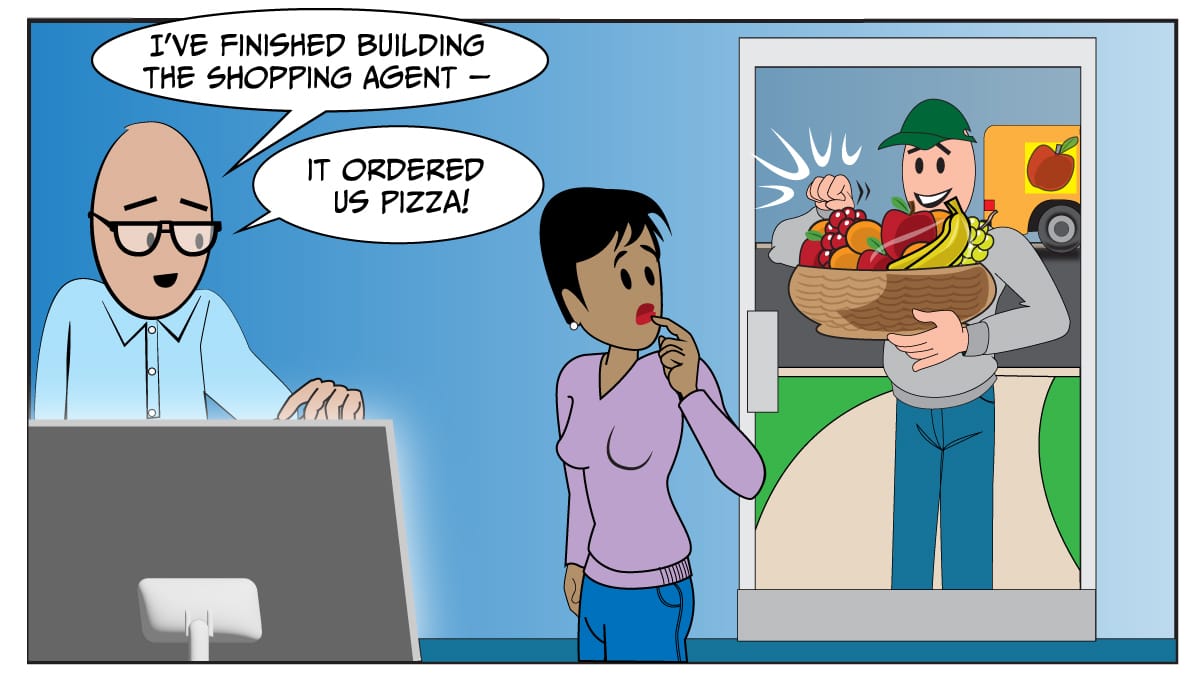

Readers responded with both surprise and agreement last week when I wrote that the single biggest predictor of how rapidly a team makes progress building an AI agent lies in its ability to drive a disciplined process for evals (measuring the system’s performance) and error analysis (identifying the causes of errors). It’s tempting to shortcut these processes and to quickly attempt fixes to mistakes rather than slowing down to identify the root causes. But evals and error analysis can lead to much faster progress. In this first part of a two-part letter, I’ll share some best practices for finding and addressing issues in agentic systems.

Even though error analysis has long been an important part of building supervised learning systems, it is still underappreciated compared to, say, using the latest and buzziest tools. Identifying the root causes of particular kinds of errors might seem “boring,” but it pays off! If you are not yet persuaded that error analysis is important, permit me to point out:

- To master a composition on a musical instrument, you don’t only play the same piece from start to end. Instead, you identify where you’re stumbling and practice those parts more.

- To be healthy, you don’t just build your diet around the latest nutrition fads. You also ask your doctor about your bloodwork to see if anything is amiss. (I did this last month and am happy to report I’m in good health! 😃)

- To improve your sports team’s performance, you don’t just practice trick shots. Instead, you review game films to spot gaps and then address them.

To improve your agentic AI system, don’t just stack up the latest buzzy techniques that just went viral on social media (though I find it fun to experiment with buzzy AI techniques as much as the next person!). Instead, use error analysis to figure out where it’s falling short, and focus on that.

Before analyzing errors, we first have to decide what counts as an error. So the first step is to put evals in place. I’ll focus on that for the remainder of this letter and discuss error analysis next week.

If you are using supervised learning to train a binary classifier, the number of ways the algorithm could make a mistake is limited. It could output 0 instead of 1, or vice versa. There are also a handful of standard metrics like accuracy, precision, recall, F1, ROC, etc. that apply to many problems. So as long as you know the test distribution, evals are relatively straightforward. Much of the work of error analysis then lies in identifying what types of input an algorithm fails on, which also leads to data-centric AI techniques for acquiring more data to strengthen the algorithm in areas where it’s weak.
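To make the binary case concrete, here is a minimal sketch in Python that computes accuracy, precision, recall, and F1 from labels and predictions. The names `BinaryMetrics` and `binary_metrics` are illustrative assumptions, not part of any particular library, and the convention of returning 0.0 when a denominator is zero is one common choice among several.

```python
from dataclasses import dataclass


@dataclass
class BinaryMetrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def binary_metrics(y_true: list[int], y_pred: list[int]) -> BinaryMetrics:
    """Compute standard metrics for 0/1 labels (hypothetical helper)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    # The only two ways to be wrong: predict 1 for a 0 (fp) or 0 for a 1 (fn).
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    total = tp + fp + fn + tn
    # Assumed convention: a metric with a zero denominator is reported as 0.0.
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return BinaryMetrics(accuracy, precision, recall, f1)


if __name__ == "__main__":
    print(binary_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
```

Because there are only two kinds of mistake, four counts are enough to describe every error the classifier can make.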

With generative AI, a lot of intuitions from evals and error analysis of supervised learning carry over — history doesn’t repeat itself, but it rhymes — and developers who are already familiar with machine learning and deep learning often adapt to generative AI faster than people who are starting from scratch. But one new challenge is that the space of outputs is much richer, so there are many more ways an algorithm’s output might be wrong.

Take the example of automated invoice processing, where we use an agentic workflow to populate a financial database with information from received invoices. Will the algorithm incorrectly extract the invoice due date? Or the final amount? Or mistake the payer address for the biller address? Or get the currency wrong? Or make the wrong API call so the verification process fails? Because the output space is much larger, the number of failure modes is also much larger.
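One way to see how quickly the failure modes multiply is to tally them per field. The sketch below is only an illustration: the field names (`due_date`, `total_amount`, `currency`, `payer_address`, `biller_address`), the `addresses_swapped` label, and the helper functions are all hypothetical, and it assumes you have hand-labeled expected values for a small set of invoices.

```python
from collections import Counter

# Hypothetical schema for the extracted invoice record.
FIELDS = ("due_date", "total_amount", "currency", "payer_address", "biller_address")


def normalize(value) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(str(value).strip().lower().split())


def field_errors(expected: dict, extracted: dict) -> list[str]:
    """Return the names of the failure modes observed for one invoice."""
    errors = [
        field
        for field in FIELDS
        if normalize(extracted.get(field, "")) != normalize(expected.get(field, ""))
    ]
    # A specific failure mode from the text: payer and biller addresses swapped.
    swapped = (
        normalize(extracted.get("payer_address", ""))
        == normalize(expected.get("biller_address", ""))
        and normalize(extracted.get("biller_address", ""))
        == normalize(expected.get("payer_address", ""))
    )
    if swapped and "payer_address" in errors:
        errors.append("addresses_swapped")
    return errors


def tally_errors(pairs: list[tuple[dict, dict]]) -> Counter:
    """Count each failure mode over (expected, extracted) pairs."""
    counts: Counter = Counter()
    for expected, extracted in pairs:
        counts.update(field_errors(expected, extracted))
    return counts
```

Calling `tally_errors` on a handful of labeled invoices gives a rough count of which fields fail most often. Failures that are not field mismatches, such as a wrong API call, would need their own checks.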

Rather than defining an error metric ahead of time, it is therefore typically more effective to first quickly build a prototype, then manually examine a handful of agent outputs to see where it performs well and where it stumbles. This allows you to focus on building datasets and error metrics — sometimes objective metrics implemented in code, and sometimes subjective metrics using LLM-as-judge — to check the system’s performance in the dimensions you are most concerned about. In supervised learning, we sometimes tune the error metric to better reflect what humans care about. With agentic workflows, I find tuning evals to be even more iterative, with more frequent tweaks to the evals to capture the wider range of things that can go wrong.
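As a rough sketch of how objective and subjective checks might sit side by side, the Python below runs a dictionary of named checks over agent outputs and reports a pass rate for each. Everything here is an assumption for illustration: the output fields, the allowed currency set, the PASS/FAIL answer format, and especially `judge`, which stands in for whatever LLM call you use and is modeled as a plain function from prompt string to reply string.

```python
from datetime import date
from typing import Callable

Check = Callable[[dict], bool]  # one eval: agent output -> pass/fail
Judge = Callable[[str], str]    # placeholder for an LLM call: prompt -> reply


def due_date_is_iso(output: dict) -> bool:
    """Objective check in code: is the due date a valid ISO date?"""
    try:
        date.fromisoformat(str(output.get("due_date", "")))
        return True
    except ValueError:
        return False


def currency_is_known(output: dict) -> bool:
    """Objective check in code: currency code is in an assumed allow-list."""
    return output.get("currency") in {"USD", "EUR", "GBP"}


def make_judge_check(judge: Judge, rubric: str, field: str) -> Check:
    """Subjective check: ask the judge to grade one field against a rubric."""
    def check(output: dict) -> bool:
        prompt = (
            f"{rubric}\n\nText to grade:\n{output.get(field, '')}\n\n"
            "Answer PASS or FAIL."
        )
        return judge(prompt).strip().upper().startswith("PASS")
    return check


def run_evals(outputs: list[dict], checks: dict[str, Check]) -> dict[str, float]:
    """Return the fraction of outputs that pass each named check."""
    if not outputs:
        return {name: 0.0 for name in checks}
    return {
        name: sum(1 for out in outputs if check(out)) / len(outputs)
        for name, check in checks.items()
    }
```

Keeping each check as a small named function makes it cheap to add, drop, or adjust checks as you learn more about where the agent stumbles. In tests, `judge` can be a stub function; in practice it would wrap a real model call.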

I discuss this and other best practices in detail in Module 4 of the Agentic AI course we announced last week. After building evals, you now have a measurement of your system’s performance, which provides a foundation for trying different modifications to your agent, as you can now measure what makes a difference. The next step is then to perform error analysis to pinpoint what changes to focus your development efforts on. I’ll discuss this further next week.

Keep building!

Andrew