Trump Resets AI Policy, Qwen3’s Agentic Advance, U.S. Chips for China, The Trouble With AI Friends

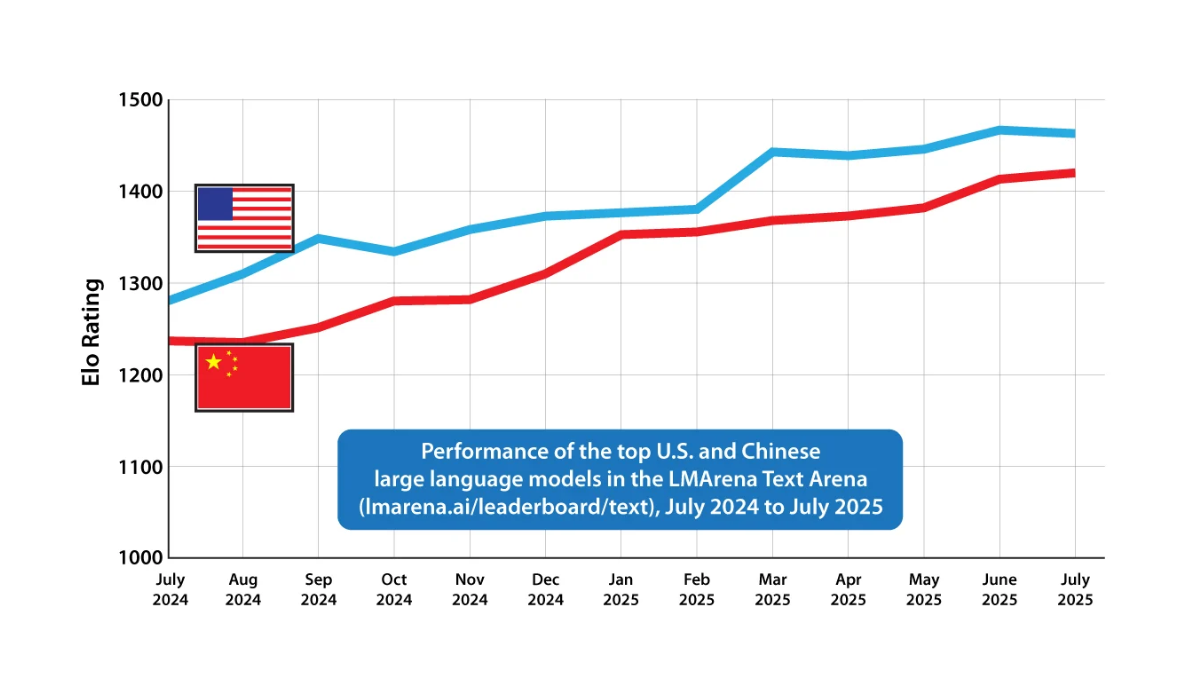

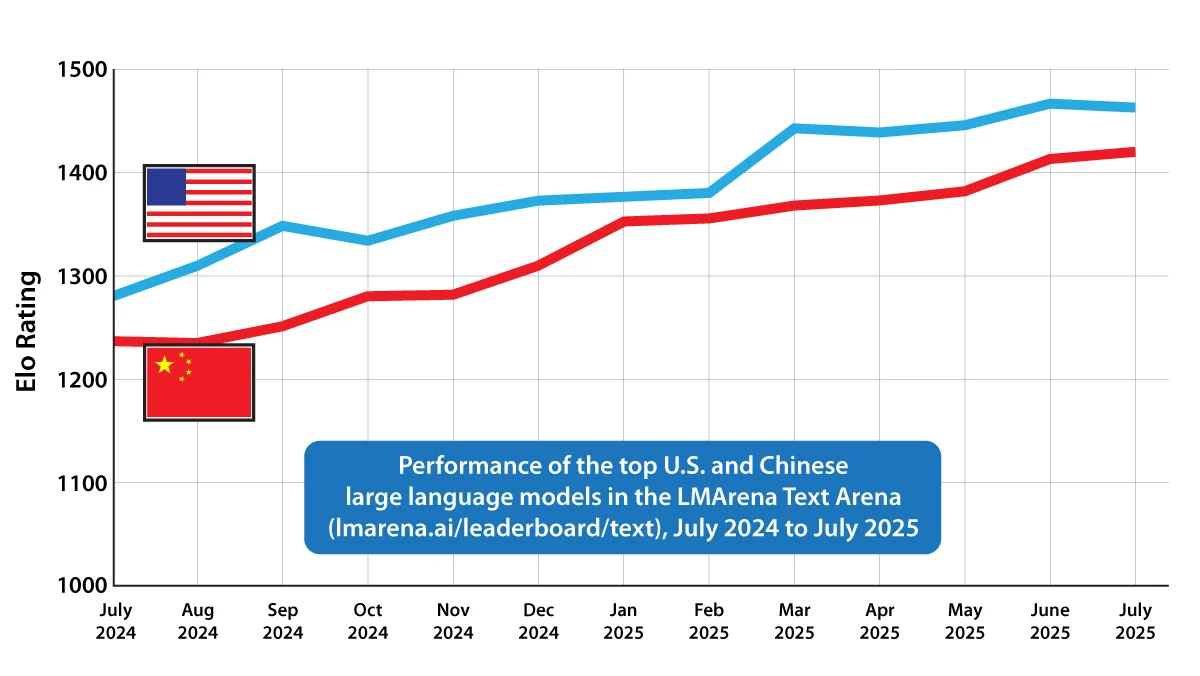

The Batch AI News and Insights

Dear friends,

There is now a path for China to surpass the U.S. in AI. Even though the U.S. is still ahead, China has tremendous momentum with its vibrant open-weights model ecosystem and aggressive moves in semiconductor design and manufacturing. In the startup world, we know momentum matters: Even if a company is small today, a high rate of growth compounded for a few years quickly becomes an unstoppable force. This is why a small, scrappy team with high growth can threaten even behemoths. While both the U.S. and China are behemoths, China’s hypercompetitive business landscape and rapid diffusion of knowledge give it tremendous momentum. The White House’s AI Action Plan released last week, which explicitly champions open source (among other things), is a very positive step for the U.S., but by itself it won’t be sufficient to sustain the U.S. lead.

Now, AI isn’t a single, monolithic technology, and different countries are ahead in different areas. For example, even before generative AI, the U.S. had long been ahead in scaled cloud AI implementations, while China has long been ahead in surveillance technology. These translate to different advantages in economic growth as well as both soft and hard power. Even though nontechnical pundits talk about “the race to AGI” as if AGI were a discrete technology to be invented, the reality is that AI technology will progress continuously, and there is no single finish line. If a company or nation declares that it has achieved AGI, I expect that declaration to be less a technology milestone than a marketing milestone. A slight speed advantage in the Olympic 100m dash translates to a dramatic difference between winning a gold medal and winning a silver medal. In contrast, an advantage in AI prowess translates into a proportionate advantage in economic growth and national power. While the impact won’t be a binary one of either winning or losing everything, these advantages nonetheless matter.

Looking at Artificial Analysis and LMArena leaderboards, the top proprietary models were developed in the U.S., but the top open models come from China. Google’s Gemini 2.5 Pro, OpenAI’s o3, Anthropic’s Claude 4 Opus, and xAI’s Grok 4 are all strong models. But open alternatives from China such as DeepSeek R1-0528, Kimi K2 (designed for agentic reasoning), Qwen3 variations (including Qwen3-Coder, which is strong at coding) and Zhipu’s GLM 4.5 (whose post-training software was released as open source) are close behind, and many are ahead of Google’s Gemma 3 and Meta’s Llama 4 — the U.S.’ best open-weights offerings.

Because many U.S. companies have taken a secretive approach to developing foundation models — a reasonable business strategy — the leading companies spend huge sums to recruit key team members from one another who might know the “secret sauce” that enabled a competitor to develop certain capabilities. So knowledge does circulate, but slowly and at high cost. In contrast, in China’s open AI ecosystem, many advanced foundation model companies undercut each other on pricing, make bold PR announcements, and poach each other’s employees and customers. This Darwinian life-or-death struggle will lead to the demise of many of the existing players, but the intense competition breeds strong companies.

In semiconductors, too, China is making progress. Huawei’s CloudMatrix 384 aims to compete with Nvidia’s GB200 high-performance computing system. While China has struggled to develop GPUs with capabilities similar to those of Nvidia’s top-of-the-line B200, Huawei is trying to build a competitive system by combining a larger number (384 instead of 72) of lower-capability chips. China’s automotive sector once struggled to compete with U.S. and European internal combustion engine vehicles, but leapfrogged ahead by betting on electric vehicles. It remains to be seen how effective Huawei’s alternative architectures prove to be, but the U.S. export restrictions have given Huawei and other Chinese businesses a strong incentive to invest heavily in developing their own technology. Further, if China were to develop its domestic semiconductor manufacturing capabilities while the U.S. remained reliant on TSMC in Taiwan, then the U.S.’ AI roadmap would be much more vulnerable to a disruption of the Taiwan supply chain (perhaps due to a blockade or, worse, a hot war).

With the rise of electricity, the internet, and other general-purpose technologies, there was room for many nations to benefit, and the benefit to one nation hasn’t come at the expense of another. I know of businesses that, many months back, planned for a future in which China dominates open models (indeed, we are there at this moment, although the future depends on our actions). Given the transformative impact of AI, I hope all nations — especially democracies with a strong respect for human rights and the rule of law — will clear roadblocks from AI progress and invest in open science and technology to increase the odds that this technology will support democracy and benefit the greatest possible number of people.

Keep building!

Andrew

A MESSAGE FROM DEEPLEARNING.AI

Build AI applications that access tools, data, and prompt templates using Model Context Protocol (MCP), an open standard developed by Anthropic. In “MCP: Build Rich-Context AI Apps with Anthropic,” you’ll build and deploy an MCP server, make an MCP-compatible chatbot, and connect applications to multiple third-party servers.

News

White House Resets U.S. AI Policy

President Trump set forth principles of an aggressive national AI policy, and he moved to implement them through an action plan and executive orders.

What’s new: In “Winning the Race: America’s AI Action Plan,” the White House outlines a trio of near-term goals for AI in the United States: (i) stimulate innovation, (ii) build infrastructure, and (iii) establish global leadership. As initial steps in these directions, the president directed the federal government to (a) procure only “ideologically neutral” AI models, (b) accelerate permitting of data-center construction, and (c) promote exports of AI technology.

How it works: Rather than advocating for legislation or legal challenges, the plan focuses on actions the executive branch of government can take on its own. Within days of starting his current term, President Trump ordered technology advisor Michael Kratsios, AI advisor David Sacks, and national security advisor Marco Rubio to make a plan to “sustain and enhance America’s global AI dominance.” Senior policy advisors Dean Ball and Sriram Krishnan, among others, also played key roles.

- Stimulate innovation: The plan would support open-source and open-weights software by boosting U.S. developers’ access to processing power and driving adoption of open models by small and medium-size businesses. It calls for the U.S. to build scientific datasets; invest in interpretability, control, robustness, and evaluations; and promote AI in defense applications. In addition, the federal government will support the development of AI skills in its funding of education and workforce training. Moreover, in a speech, Trump said he wants AI companies to be allowed to use copyrighted works freely to train models.

- Build AI infrastructure: The plan aims to accelerate the building of data centers, semiconductor manufacturing plants, and energy infrastructure. To this end, the federal government will create exemptions to environmental laws, accelerate approvals, and make federal lands available.

- Strengthen global competitiveness: The plan provides for strengthening AI-related export controls, countering the influence of China, and promoting U.S. values in international agreements regarding sensitive technologies such as face recognition. The federal government will coordinate overseas sales of U.S.-made hardware, models, software tools, applications, and standards. To avoid subjecting U.S. companies to a variety of state laws, it will withhold funding from states that pass AI regulations the administration considers burdensome.

Behind the news: In contrast to President Trump’s emphasis on U.S. dominance in AI, the previous Biden administration focused on limiting perceived risks.

- In 2023, the Biden administration issued an executive order that required developers to notify government regulators when they built a model that could pose a risk to national security. It advocated legislation that aimed to protect user privacy and prevent AI from discriminating against protected groups.

- Biden limited exports of U.S. chips and chip-making technology to numerous countries, notably China but also U.S. allies such as India and Singapore. Trump similarly banned chip sales to China, but reversed course in mid-July and pledged to allow Nvidia and AMD to sell advanced chips to China.

Why it matters: The Trump administration’s action plan sets the stage for U.S. AI developers to do their best work and share their accomplishments with the world. It aims to avoid the European Union’s risk-averse regulatory approach and counter China’s rising power and influence in AI development. To those ends, it prioritizes a unified national AI policy, streamlines the building of infrastructure, facilitates distributing models and hardware abroad, supports the development of datasets and open-source models, and refrains from defining arbitrary thresholds of theoretical risk.

We’re thinking: This plan is a positive step toward giving the U.S. the infrastructure, global reach, and freedom from bureaucratic burdens that it needs to continue — and possibly accelerate — the rapid pace of innovation. However, the executive order in support of models that are “objective and free from top-down ideological bias” is wrong-headed. The president complains that some AI models are “woke,” and he wants to discourage references to climate change, diversity, and misinformation. But putting those requirements into an executive order, even if it clears some roadblocks to AI development, risks emphasizing some of Trump’s own ideological preferences.

Qwen3’s Agentic Advance

Less than two weeks after Moonshot’s Kimi K2 bested other open-weights, non-reasoning models in tests related to agentic behavior, Alibaba raised the bar yet again.

What’s new: Alibaba released the weights for three new large language models based on its earlier Qwen3-235B-A22B. It updated the earlier model (designating the update 2507), divided it into non-reasoning and reasoning variants, and added Qwen3-Coder for coding and multi-turn tool use.

- Input/output: Qwen3-235B-A22B-Instruct-2507 and Qwen3-235B-A22B-Thinking-2507: Text in (up to 262,144 tokens), text out (adjustable, up to 32,768 tokens recommended). Qwen3-Coder: Text in (up to 1 million tokens), text out (adjustable, up to 32,768 tokens recommended).

- Architecture: Mixture-of-experts transformers. Qwen3-235B-A22B-Instruct-2507 and Qwen3-235B-A22B-Thinking-2507: 235 billion parameters, 22 billion active per token. Qwen3-Coder: 480 billion parameters, 35 billion active per token.

- Performance: Qwen3-235B-A22B-Instruct-2507: best among non-reasoning models on most benchmarks reported. Qwen3-235B-A22B-Thinking-2507: middling performance compared to proprietary reasoning models. Qwen3-Coder: best among coding models on most benchmarks reported.

- Availability: Free for noncommercial and commercial uses under Apache 2.0 license via Hugging Face and ModelScope, API access via Alibaba Cloud.

- API price: Qwen3-235B-A22B-Instruct-2507: $0.70/$2.80 per 1 million input/output tokens. Qwen3-235B-A22B-Thinking-2507: $0.70/$8.40 per 1 million input/output tokens. Qwen3-Coder: $1 to $6 per 1 million input tokens and $5 to $60 per 1 million output tokens, depending on the number of input tokens.

- Undisclosed: Qwen3-235B-A22B-Instruct-2507 and Qwen3-235B-A22B-Thinking-2507: updated training data and methods. Qwen3-Coder: training data and methods.
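By the figures above, roughly 22/235 ≈ 9.4 percent of the instruct and thinking models’ parameters, and 35/480 ≈ 7.3 percent of Qwen3-Coder’s, are used for any given token. The article doesn’t describe how Qwen3 routes tokens, so the following is only a minimal sketch of generic top-k mixture-of-experts routing in plain Python: the number of experts, the value of k, the dot-product router, and the toy “experts” (which just scale their input) are illustrative assumptions, not Qwen3’s actual configuration. Production MoE layers also add load-balancing terms that are omitted here.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, router, experts, k):
    """Top-k mixture-of-experts forward pass for a single token vector.

    token:   list[float], the token's hidden state
    router:  one weight vector per expert (same length as token)
    experts: one callable per expert, mapping a vector to a vector
    k:       number of experts activated for this token
    """
    # Router logits: dot product of the token with each expert's router vector.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router]
    # Keep the k highest-scoring experts; the others are never evaluated,
    # which is why only a fraction of the parameters is "active" per token.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Mixing weights are a softmax over the chosen experts' logits only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


# Toy setup (hypothetical): 8 experts, each scales its input by a different factor.
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(8)]
router = [[float(i), 0.0, 0.0, 0.0] for i in range(8)]
out, chosen = moe_forward([1.0, 0.0, 0.0, 0.0], router, experts, k=2)
print(chosen)  # → [7, 6]
```

Only two of the eight toy experts run for this token; scaling the same idea up is what lets a 235-billion-parameter model spend compute like a much smaller one.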

How it works: The updated Qwen3 models underwent pretraining and reinforcement learning (RL) phases, but the company has not yet published details. During RL, the team used a modified version of Group Relative Policy Optimization (GRPO) that it calls Group Sequence Policy Optimization (GSPO).
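The article doesn’t say how GSPO differs from GRPO. Based on our reading of the Qwen team’s separately published GSPO description, the key change is where the importance ratio is computed: GRPO clips a ratio for every token, while GSPO clips one length-normalized ratio per sampled response, matching the fact that rewards are assigned per response. The sketch below evaluates both surrogate objectives for a single group of responses under that assumption. It omits gradients and any KL penalty, and the clipping range of 0.2 is an illustrative default, not the value Qwen used.

```python
import math
import statistics


def group_advantages(rewards, eps=1e-8):
    """Group-relative advantage (shared by GRPO and GSPO): each response's
    reward is normalized by the mean and std of its group of samples."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def clip(x, lo, hi):
    return max(lo, min(x, hi))


def clipped_term(ratio, adv, clip_eps):
    """PPO-style pessimistic clipped surrogate for one ratio/advantage pair."""
    return min(ratio * adv, clip(ratio, 1 - clip_eps, 1 + clip_eps) * adv)


def sequence_ratio(logp_new, logp_old):
    """GSPO: one length-normalized importance ratio per response,
    (pi_new(y|x) / pi_old(y|x)) ** (1 / |y|), computed in log space."""
    diffs = [n - o for n, o in zip(logp_new, logp_old)]
    return math.exp(sum(diffs) / len(diffs))


def grpo_objective(batch_new, batch_old, rewards, clip_eps=0.2):
    """GRPO-style surrogate: clip each token's ratio separately."""
    advs = group_advantages(rewards)
    per_response = []
    for new, old, adv in zip(batch_new, batch_old, advs):
        terms = [clipped_term(math.exp(n - o), adv, clip_eps) for n, o in zip(new, old)]
        per_response.append(statistics.fmean(terms))
    return statistics.fmean(per_response)


def gspo_objective(batch_new, batch_old, rewards, clip_eps=0.2):
    """GSPO-style surrogate: clip one sequence-level ratio per response."""
    advs = group_advantages(rewards)
    terms = [clipped_term(sequence_ratio(new, old), adv, clip_eps)
             for new, old, adv in zip(batch_new, batch_old, advs)]
    return statistics.fmean(terms)


# Per-token log-probs of two sampled responses under the sampling (old)
# policy and the current (new) policy; values are invented for illustration.
old = [[-1.0, -2.0], [-0.5, -1.5, -1.0]]
new = [[-0.9, -2.1], [-0.4, -1.2, -1.1]]
rewards = [1.0, 0.0]
print(grpo_objective(new, old, rewards), gspo_objective(new, old, rewards))
```

When the new and old policies are identical, every ratio is 1 and both objectives reduce to the mean group-normalized advantage, which is zero.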

- Qwen3-235B-A22B-Instruct-2507 and Qwen3-235B-A22B-Thinking-2507: The team removed the switch that previously enabled or disabled reasoning within a single model. Instead, users choose between the non-reasoning and reasoning models. Both models accept inputs up to twice as long as the previous version could.

- Qwen3-Coder: The team pretrained Qwen3-Coder on 7.5 trillion tokens, 70 percent of which were code. During RL, Qwen3-Coder learned to solve tasks that required multiple turns of tool use.
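The article says only that Qwen3-Coder learned, during RL, to solve tasks that required multiple turns of tool use; the Qwen team hasn’t published its environments or reward design. As a rough illustration of what one such episode looks like, here is a minimal sketch in which a policy repeatedly either calls a tool or returns a final answer. Everything in it is hypothetical: the scripted policy stands in for the model, the `add` and `mul` tools are toys, and the outcome-only reward (1 for a correct final answer, 0 otherwise) is an assumption.

```python
from typing import Callable, Dict, List, Optional, Tuple

# An action is either {"tool": name, "args": {...}} or {"answer": value}.
Action = Dict[str, object]
Step = Tuple[Action, object]


def run_episode(policy: Callable[[List[Step]], Action],
                tools: Dict[str, Callable[..., object]],
                max_turns: int = 10) -> Tuple[Optional[str], List[Step]]:
    """Roll out one multi-turn episode: the policy sees the history of tool calls
    and results, then either calls another tool or returns a final answer."""
    history: List[Step] = []
    for _ in range(max_turns):
        action = policy(history)
        if "answer" in action:
            return str(action["answer"]), history
        result = tools[action["tool"]](**action["args"])
        history.append((action, result))
    return None, history  # ran out of turns without answering


def outcome_reward(answer: Optional[str], expected: str) -> float:
    """Hypothetical outcome-based reward: 1 if the final answer is correct, else 0."""
    return 1.0 if answer == expected else 0.0


# Scripted stand-in for the model: compute (2 + 3) * 4 using two tool calls.
def scripted_policy(history: List[Step]) -> Action:
    if not history:
        return {"tool": "add", "args": {"a": 2, "b": 3}}
    if len(history) == 1:
        return {"tool": "mul", "args": {"a": history[-1][1], "b": 4}}
    return {"answer": history[-1][1]}


tools = {"add": lambda a, b: a + b, "mul": lambda a, b: a * b}
answer, trace = run_episode(scripted_policy, tools)
print(answer, outcome_reward(answer, "20"))  # → 20 1.0
```

In RL training, the reward from the whole episode would be credited to every action the model took along the way, which is what pushes it toward sequences of tool calls that end in correct answers.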

Performance: The authors compared Qwen3-235B-A22B-Instruct-2507 and Qwen3-235B-A22B-Thinking-2507 to both open and proprietary models across tasks that involved knowledge, reasoning, coding, and tool use. They compared Qwen3-Coder to open and proprietary models on agentic tasks (coding, tool use, and browser use).

- Qwen3-235B-A22B-Instruct-2507 achieved the best performance on 14 of 25 benchmarks tested compared to other non-reasoning models, including Kimi K2, Claude Opus 4 (with reasoning mode turned off), and GPT-4o. It did especially well on knowledge and reasoning tasks. For example, on GPQA (graduate-level science questions), Qwen3-235B-A22B-Instruct-2507 (77.5 percent accuracy) outperformed second-best Kimi K2 (75.1 percent accuracy).

- Qwen3-235B-A22B-Thinking-2507 achieved the best performance on 7 of 23 benchmarks compared to other reasoning models, often trailing o3 and Gemini 2.5 Pro but leading Claude 4 Opus with thinking mode turned on. For instance, on GPQA, Qwen3-235B-A22B-Thinking-2507 (81.1 percent accuracy) fell behind Gemini 2.5 Pro (86.4 percent) and o3 (83.3 percent) but ahead of Claude 4 Opus (79.6 percent).

- Qwen3-Coder outperformed open-weights models Kimi K2 Instruct and DeepSeek-V3 on all 13 benchmarks presented that involve agentic capabilities like multi-turn coding and agentic workflows. Compared to Claude Sonnet 4, it achieved better performance on 6 of 13. For instance, on SWE-bench Verified (software engineering tasks), the authors compared the models using the OpenHands agentic framework with up to 100 turns. Qwen3-Coder succeeded 67 percent of the time, while Kimi K2 Instruct succeeded 65.4 percent of the time and Claude Sonnet 4 succeeded 68 percent of the time.

Why it matters: Developers of open-weights models are adjusting their approaches to emphasize performance in agentic tasks (primarily involving coding and tool use). These models open doors to a vast range of applications that, given a task, can plan an appropriate series of actions and interact with other computer systems to execute them. That the first wave of such models was built by teams in China is significant: U.S. developers like Anthropic, Google, and OpenAI continue to lead the way with proprietary models, but China’s open-weights community is hot on their heels, while the U.S. open-weights champion, Meta, may step away from this role.

We’re thinking: Agentic performance is driving the next wave of AI progress. We hope to learn more about how the Qwen team raised the bar.

U.S. Lifts Ban on AI Chips for China

Nvidia will resume sales of H20 processors in China.

What’s new: Nvidia and AMD said they’ll resume supplying China with graphics processing units (GPUs) tailored to comply with U.S. export restrictions, including Nvidia’s H20 and AMD’s MI308. The Trump administration, which had blocked the sales, assured the companies that it would now allow them.

How it works: In April, the White House announced that shipments to China of Nvidia H20s, AMD MI308s, and equivalent chips would require export licenses, which apparently would not be forthcoming. That requirement effectively shut both companies out of China, which in 2024 accounted for 13 percent of Nvidia’s revenue and 24 percent of AMD’s. The White House’s decision to grant the licenses follows months of lobbying by Nvidia CEO Jensen Huang.

- Huang met with Trump in the Oval Office, built relationships with key White House officials, and attended a $1-million-a-seat dinner for a chance to speak with the president, The New York Times reported.

- Huang told Trump the H20 was inferior to the company’s top-of-the-line processors. He argued that the bans prevented U.S. chipmakers from competing in a critical market and assisted Chinese competitors by shutting out Nvidia, which sells more than 90 percent of GPUs globally. In addition, he agreed to spend $500 billion to fabricate GPUs in the U.S. rather than Taiwan, where they are currently manufactured.

- The White House said it relaxed restrictions on chip sales to China in part because China eased limits on shipments to the U.S. of rare-earth permanent magnets, which are critical to defense, automotive, and technology companies.

- Nvidia told customers in China that it would initially struggle to meet demand for the H20 due to limited supply, The Information reported.

Behind the news: U.S. lawmakers of both major parties aim to protect U.S. economic interests and prevent China from using advanced chip technology for military applications.

- In 2022, the Biden administration restricted exports to China of some advanced AI chips. The restrictions were tightened further in 2023 and 2024, and again by President Trump this year.

- Nvidia designed the H20 to comply with the Biden-era restrictions. Launched in 2024, the H20 provides 28 percent less processing power than the H100, Nvidia’s top-of-the-line chip at the time, but more memory and memory bandwidth. The balance between downgrade and upgrade has led some analysts to question whether the H20 is actually hobbled for many purposes.

- The restrictions have met with mixed results. Chinese companies have acquired top-of-the-line chips on the black market or, without violating U.S. export controls, paid for cloud-computing access to chips located in countries where they’re available.

Why it matters: AI presents geopolitical opportunities for technological and economic dominance as well as challenges to military power. The U.S. export restrictions are intended to balance these elements, yet they have been largely ineffective so far. This year, DeepSeek developed DeepSeek-R1, which delivers high performance for a low development cost. H20s were among the hardware used to train that model, TechCrunch reported. Alibaba, Moonshot, Tencent, and other Chinese companies also have produced high-performance foundation models, while China has accelerated its own semiconductor industry to avoid relying on U.S. suppliers. Relaxing the restrictions may balance U.S. interests more effectively.

We’re thinking: Ensuring national security is crucial, but so is enabling the free flow of ideas and innovation. We applaud the relaxation of trade restrictions and look forward to further contributions by developers in China and around the world.

People With AI Friends Feel Worse

People who turn to chatbots for companionship show indications of lower self-reported well-being, researchers found.

What’s new: Yutong Zhang, Dora Zhao, Jeffrey T. Hancock, and colleagues at Stanford and Carnegie Mellon examined correlations between users’ chatbot usage and psychological health. The more frequently users chatted, shared personal information, and went without human social relationships, the lower they rated their own well-being, the authors found.

Key insight: Chatbot users may not report the subject matter of their conversations accurately, but LLMs can identify and summarize topics in chat histories. This makes it possible to correlate the intensity and depth of chats with self-reported measures of well-being, such as loneliness and satisfaction.

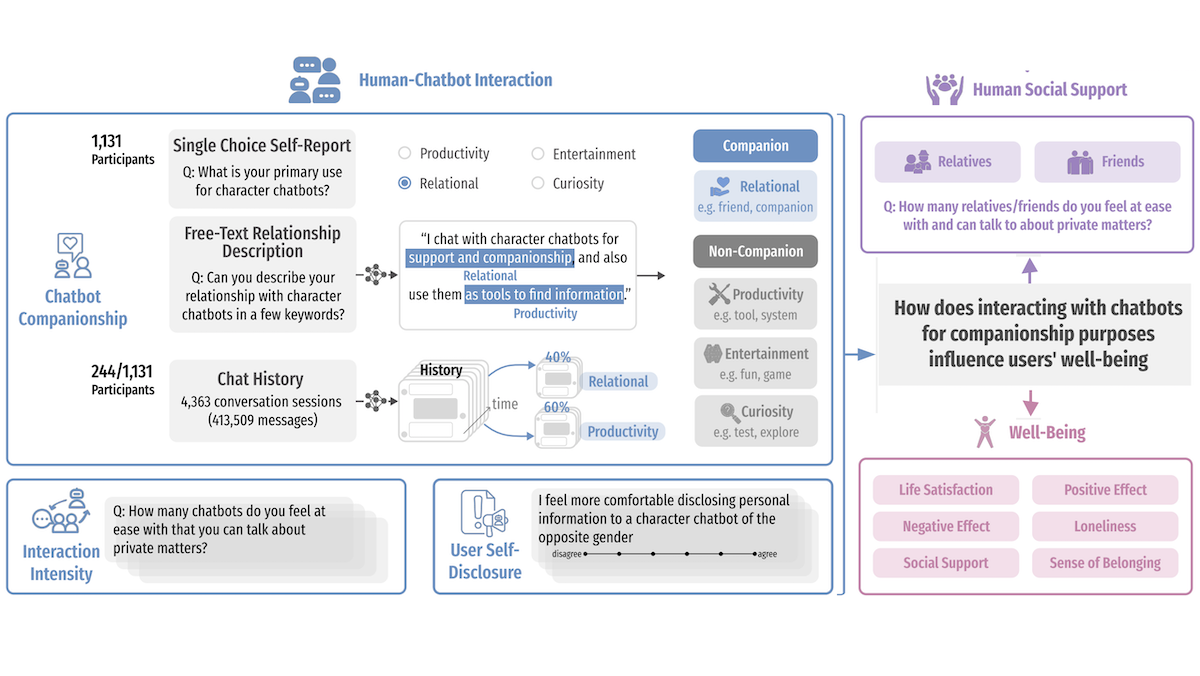

How it works: The authors surveyed 1,131 users of the chatbot service Character.AI, which provides chatbots for purposes like roleplay, conversation, and education. In addition, they gathered 413,509 messages from 4,363 conversations with 244 participants who agreed to share their chat logs.

- The authors compared the users’ stated motivations for using chatbots with their likely motivations. They asked the users to select one of the following motivations: productivity, curiosity, entertainment, or companionship. They also asked them to describe freely why they used Character.AI. GPT-4o classified these free-text descriptions according to the four categories of motivation, giving the authors a more nuanced view.

- They surveyed users to measure the intensity of their chatbot interactions, including how many chatbots they conversed with, how long they conversed, and how comfortable they felt disclosing sensitive personal information.

- They also surveyed users to measure their human social support, including how many close relationships they had with friends and relatives.

- Finally, they asked questions to measure the users’ well-being based on six factors: satisfaction, loneliness, sense of belonging, positive and negative emotions, and perceived social support.

- LLaMA-3-70B summarized conversations and fed the summaries to TopicGPT, which identified recurring themes.
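The comparison of stated and likely motivations boils down to two shares: how many users picked a motivation in the multiple-choice question, and how many wrote free-text descriptions that a classifier assigns to that motivation. The authors used GPT-4o as the classifier; the minimal sketch below substitutes a hypothetical keyword rule so it runs offline, and the four responses are invented toy inputs, not data from the study.

```python
MOTIVATIONS = ("productivity", "curiosity", "entertainment", "companionship")

# Hypothetical keyword rules standing in for the GPT-4o classification step.
# Rules are checked in order; anything unmatched falls back to "curiosity".
KEYWORD_RULES = [
    ("companionship", ("friend", "partner", "lonely", "talk to")),
    ("productivity", ("work", "essay", "study")),
    ("entertainment", ("fun", "game", "story")),
]


def classify(description: str) -> str:
    text = description.lower()
    for label, keywords in KEYWORD_RULES:
        if any(k in text for k in keywords):
            return label
    return "curiosity"


def stated_vs_inferred(responses, label="companionship"):
    """responses: (multiple-choice answer, free-text description) pairs.
    Returns the share of users who selected `label` and the share whose
    free-text description is classified as `label`."""
    if label not in MOTIVATIONS:
        raise ValueError(f"unknown motivation: {label}")
    n = len(responses)
    stated = sum(choice == label for choice, _ in responses) / n
    inferred = sum(classify(text) == label for _, text in responses) / n
    return stated, inferred


# Invented toy responses, not data from the study.
responses = [
    ("entertainment", "It's like a friend I can talk to"),
    ("productivity", "Helps me outline essays"),
    ("companionship", "I feel lonely at night"),
    ("curiosity", "Wanted to see what it can do"),
]
print(stated_vs_inferred(responses))  # → (0.25, 0.5)
```

A gap between the two numbers, with the inferred share higher, is the kind of pattern the authors report when users who didn’t select companionship nonetheless described their chatbot as a friend or partner.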

Results: The authors computed correlations among the various signals and the six measures of well-being. They found that most users turned to chatbots for companionship, whether or not they selected companionship as a motivation for their chats. Furthermore, reliance on chatbots for companionship was associated with lower well-being.

- 12 percent of users surveyed selected companionship as their primary reason for using Character.AI, but 51 percent described their chatbot as a friend, companion, or romantic partner. The chat logs showed much higher use of chatbots as companions: 93 percent of users had at least one conversation that showed companion-like engagement, 80 percent of chat sessions involved emotional and social support, and 68 percent involved romantic or intimate roleplay.

- Greater use of chatbots for companionship correlated with lower self-reported well-being. This effect was strongest when companionship was the main reason given in the multiple-choice survey: a correlation of -0.47 with well-being, on a scale that runs from -1 (a perfect negative correlation) through 0 (no correlation) to 1 (a perfect positive correlation).
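The article doesn’t name the statistic behind the -0.47 figure. Assuming it is a Pearson correlation coefficient, this minimal sketch shows how such a number is computed and why a negative value means that higher scores on one measure tend to accompany lower scores on the other. The scores are invented toy values, not data from the study.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences.
    Ranges from -1 (perfect negative linear relationship) to 1 (perfect positive)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Covariance and variances (the 1/n factors cancel in the ratio).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Invented toy data: one companionship-use score and one well-being score per user.
companionship_use = [1, 2, 3, 4]
well_being = [4, 2, 3, 1]
print(round(pearson(companionship_use, well_being), 2))  # → -0.8
```

As the authors caution, a coefficient like this measures association only; it says nothing about which variable, if either, drives the other.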

Yes, but: The authors found a consistent correlation between chatbot companionship and lower well-being, but they didn’t establish causation. The data shows that people who sought companionship from chatbots likely struggled with loneliness or a lack of close social connections. It remains unclear whether loneliness caused the users to use chatbots for companionship or vice versa, or whether using chatbots relieved or exacerbated their loneliness.

Behind the news: AI companions have been shown to bring both benefit and harm. Some studies report short-term benefits like reduced loneliness and emotional relief. Users say chatbots are nonjudgmental and easy to talk to. But other work has found emotional overdependence, distorted relationship expectations, and harmful behavior encouraged by unmoderated bots.

Why it matters: Increasingly, people converse with chatbots as an alternative to human conversation. Chatbot builders must be aware of the potential pitfalls of using their products and conduct research sufficient to enable them to build more beneficial bots. Of course, society also has a role to play by fostering social support through access to community, care infrastructure, and mental-health services.

We’re thinking: Whether it’s beneficial or not, developers are building chatbots that aim to form relationships with people. Such relationships appear to fulfill many of the same needs as human relationships, and they do so in ways that many people, for a wide variety of reasons, find more practical or comfortable. Some developers may be tempted to exploit such needs for profit, but we urge them to design apps that focus on strengthening human-to-human relationships.