More Robust Multi-Agent Systems: Researchers improve multi-agent systems by studying how they tend to fail

Researchers addressed weaknesses in existing multi-agent frameworks. Their systems achieved scientific and technical breakthroughs.

Researchers addressed weaknesses in existing multi-agent frameworks. Their systems achieved scientific and technical breakthroughs.

What’s new: Mert Cemri and colleagues at UC Berkeley and the Italian bank Intesa Sanpaolo examined ways in which multi-agent LLM systems tend to fail. They explored possible fixes and built more robust multi-agent systems that, for instance, improved Google’s own processing infrastructure.

Key insight: Multi-agent systems often are modeled after human organizations, so their failure modes can mirror those of human organizations. For instance, people in organizations may fail to seek clarification for tasks they don’t understand well. AI builders can address similar issues among agents by, say, forcing them to ask for clarification if their confidence falls below a threshold. Other strategies include strengthening verification that an agent completed its task, standardizing protocols for inter-agent communication, and improving descriptions of agents’ roles.

How it works: The authors fed queries from existing software-engineering and math-problem datasets to open-source, multi-agent frameworks including AG2 (disclosure: Andrew Ng has a personal investment in AG2) and ChatDev, using GPT-4o as the LLM component. They collected all model and tool outputs for more than 150 failed attempts. Annotators classified failures of agent interaction, enabling the authors to build a taxonomy of multi-agent failure modes and revise the frameworks to address general categories of weakness.

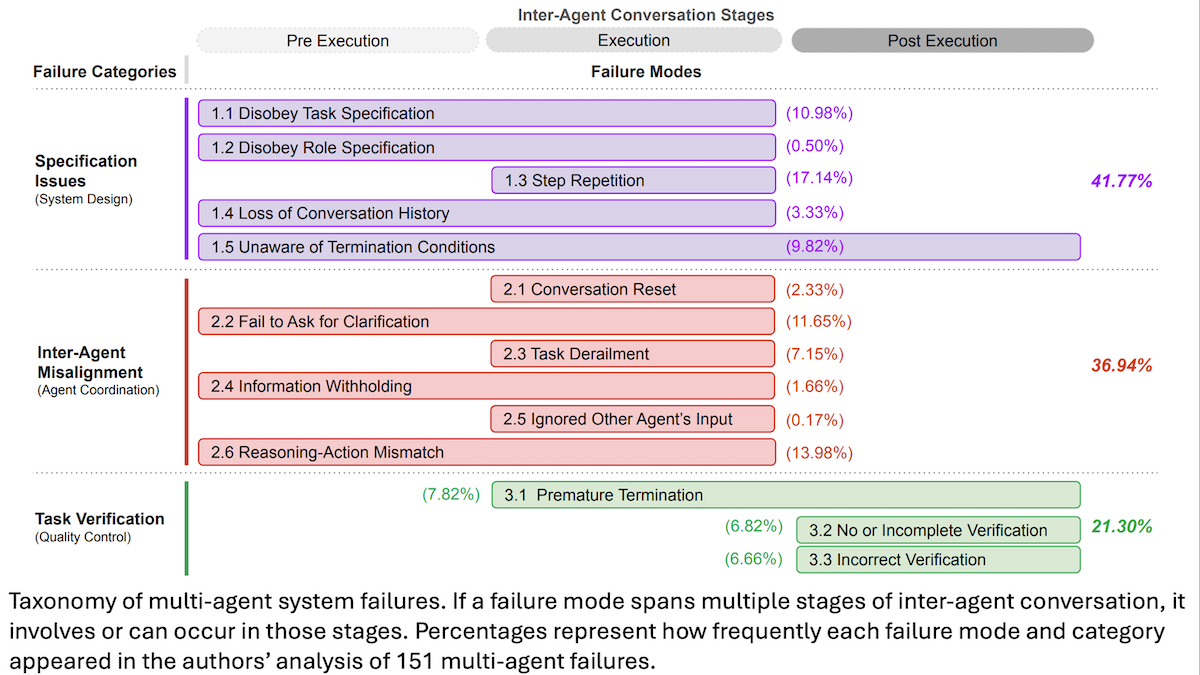

- The authors divided multi-agent system failures into three categories: poor specifications (including 5 subcategories such as agents losing track of their assigned roles and losing conversation history), inter-agent misalignment (6 subcategories that describe failures in coordination and communication such as withholding information or failing to ask for clarification), and poor task verification (3 subcategories such as ending a task without making sure the goal was achieved).

- The authors modified AG2 and ChatDev. They improved prompts (for instance, adding a verification section that read, “Before presenting your final answer, please complete the following steps: …”) and redesigned the multi-agent structure (for example, reconfiguring agents’ roles from the duo of student and assistant to the trio of problem solver, coder, and verifier).

Results: The authors tested versions of AG2 and ChatDev with and without their improvements. They used AG2 to solve math tasks in the GSM-Plus benchmark and ChatDev to solve programming tasks in HumanEval.

- With improved prompts, AG2 achieved 89 percent accuracy. With improved structure, it achieved 88.8 percent accuracy. Without improvements, it achieved 84.3 percent accuracy.

- ChatDev achieved 90.3 percent with better prompts and 91.5 percent accuracy with improved structure. It achieved 89.6 percent accuracy without improvements.

Why it matters: Designing robust multi-agent systems requires more than good LLMs. It demands understanding how agents interact and where their interactions can go wrong. The authors’ taxonomy points toward systemic ways to diagnose and address failures, guiding developers toward multi-agent systems that prioritize collaboration over individual agents.

We’re thinking: By design, the author’s taxonomy doesn’t include a category for inefficient actions. For instance, one multi-agent system made 10 separate tool calls to retrieve 10 songs from Spotify, rather than retrieving all 10 songs at once. It’s a good bet that multi-agent systems will continue to improve.