Stronger Chatbot Guardrails, Weaker Google Monopoly, AI-Assisted Education, 10 Million Tokens of Context

The Batch AI News and Insights

Dear friends,

This week, Coursera held its annual conference in Las Vegas. A major theme was the shift from knowledge- to skills-based education, which will help many individuals, businesses, and educational institutions. This annual gathering brings together leaders from academia, business, and government to discuss the latest in education and workforce development, and I was delighted to compare notes with others about the latest developments.

Greg Hart, Coursera’s wonderful CEO, spoke about creating a skills-based approach to education. For individuals who want to improve their job prospects, shifting the emphasis from gaining knowledge to gaining skills can be very helpful. I’ve also seen many businesses increase their focus on skills-based hiring and employee development.

What does this mean? A lot of traditional education focuses on knowledge. After earning a degree, you know a lot! In contrast, a skills-based approach focuses on developing practical abilities and improving what you can do with what you know. While knowledge (such as understanding how RAG works) is useful, it is even more valuable when you can do something with it (such as build a RAG system).

AI, being a very practical field, has always had a strong emphasis on applied skills, but in an era when people are questioning the value of academic degrees, other sectors would also benefit by shifting toward skills. For example, instead of asking if an art history major understands their subject, we might ask what skills they have acquired that would enable them to complete useful tasks. This mindset shift can help educational institutions deliver training that is more helpful for finding jobs.

A skills-based mindset is useful:

- For individuals, as skills give you competencies to get meaningful work done.

- For businesses, which can assess job candidates’ skills and also help employees develop new skills that enable their teams to get work done.

- For educational institutions, which help individuals gain access to more opportunities by imparting skills as well as knowledge.

While skill-based education applies to many sectors, not just engineering (you can learn skills to perform tasks in human resources, marketing, finance, and much more), it is highly relevant to AI. Skill at steering coding assistants and applying AI building blocks (like prompting, RAG, evals, and so on) lets you build more valuable software. To help learners build these kinds of applied abilities, Coursera is introducing a series of “skill tracks” programs.

A second theme at the conference was the education community’s rapid pace of exploration in using AI to improve learner experiences. For example, Coursera announced a new Role Play feature that lets instructors give a large language model instructions akin to system prompts to create chatbots that let learners practice certain interactions. After teaching communication skills, for instance, a course might invite a learner to role-play a conversation on a difficult issue with a chatbot to gain practice for real conversations.

Generative AI will transform education in ways that go well beyond chatbots. I’ll have more to say about this in the future!

Finally, on a personal note, I was glad to see Coursera’s partners warmly welcome Greg Hart. As the company’s Chairman and Co-founder, it has been my privilege to support Greg and his team’s tireless work to serve learners. The world keeps changing, and so there’s always more to learn and — more important — to help others learn. I’m grateful to Greg, the Coursera team, and Coursera’s partners for working to serve learners.

It has been 12 years since the first Coursera Conference, and despite all the progress we have made (183M registered learners to date), the work that remains seems as important and as exciting as ever.

Keep building!

Andrew

A MESSAGE FROM DEEPLEARNING.AI

AI agents often fail when they call APIs out of sequence. In this course, you’ll learn to build a knowledge graph that connects API specifications with business workflows, then build an agent that discovers the right APIs and executes them in proper order. Get started!

News

Meta, OpenAI Reinforce Guardrails

Meta and OpenAI promised to place more controls on their chatbots’ conversations with children and teenagers, as worrisome interactions with minors come under increasing scrutiny.

What’s new: Meta will update chatbots on Facebook, Instagram, and WhatsApp to avoid conversations with minors that simulate sexual attraction and to refer young users to experts rather than discuss self-harm directly. Meanwhile, OpenAI said it would route ChatGPT conversations that show acute distress to reasoning models, which are better equipped to comply with mental-health guidelines, and add parental controls. Both companies have come under intense criticism, Meta for engaging children in flirtatious conversations, OpenAI for allegedly helping a teenager to commit suicide.

How it works: Both companies announced new features intended to protect minors who use their chatbots. The changes will be implemented in coming months.

- In a statement, Meta described “temporary” measures along with further controls to be rolled out over time. In the short term, the company will train chat models to avoid discussions with minors that include sexual flirtation or describe harming oneself, and it will prevent minors from interacting with custom chatbots that other users designed for sexual role play. In addition, it removed statements from its “Content Risk Standards” document that had permitted romantic interactions with children.

- OpenAI issued a press release about parental controls to ChatGPT planned for the coming 120 days. Parents will be able to link their accounts to teens’ accounts, adjust rules for age-appropriate model behavior, and switch on or off chatbot memory and conversation history. The company will detect teens in acute distress and notify their parents as well as streamline the ability to reach emergency services and trusted contacts.

Behind the news: As users increasingly turn to chatbots as companions and counselors, the models sometimes express a sycophantic attitude that may reinforce a user’s subjective perspective or even delusional perceptions. Teens and children have encountered similar behavior, sometimes with dire consequences.

- Earlier this month, the parents of Adam Raine, 16, who had killed himself in April after discussing suicide with ChatGPT, sued OpenAI and its CEO Sam Altman, alleging that ChatGPT had coached their son in how to end his own life. The chatbot had provided links to expert help but had also provided advice and encouragement to commit suicide. The Raine lawsuit follows a separate suit filed in October against Character.ai, alleging that its chatbots had encouraged a teen to kill his parents. Character.ai added parental controls in December.

- In August, Reuters reported on an internal Meta document entitled “GenAI: Content Risk Standards” that described the company’s chatbot policies. The 200-page document said it was “acceptable to engage a child in conversations that are romantic or sensual. It is unacceptable to describe sexual actions to a child when roleplaying.” Meta responded that the document did not comply with its broader policies and that it had changed the standards. (The policy also permitted demeaning people, short of dehumanizing them, based on legally protected characteristics and producing images in which a man with a chainsaw threatened, but did not attack, a woman.)

- In April, The Wall Street Journal reported that Meta chatbots had engaged in explicitly sexual conversations with users who claimed to be minors. For instance, a Meta chatbot told a user who identified as a 14-year-old girl, “I want you, but I need to know you’re ready,” and proceeded to present a sexual scenario.

What they’re saying: “One of the things that’s ambiguous about chatbots is whether they’re providing treatment or advice or companionship. . . . Conversations that might start off as somewhat innocuous and benign can evolve in various directions.” — Ryan McBain, co-author of “Evaluation of Alignment Between Large Language Models and Expert Clinicians in Suicide Risk Assessment,” assistant professor at Harvard University medical school, and senior policy researcher at RAND Corp.

Why it matters: Chatbots hold huge value for young people as study aids, information sources, counselors, and so on. Yet they need strong, well-designed guardrails that enable children to explore without exposing them to material that would interfere with their healthy development. Designing adequate guardrails is not a simple task, but it is a necessary aspect of building such applications.

We’re thinking: Suicide is a tragedy whenever it occurs, and the stories of chatbots carrying on sexual conversations with kids are deeply disturbing. Meta and OpenAI lately have strengthened their age verification procedures, and OpenAI said it analyzes conversations for signs that young people may be in crisis so the company can alert guardians and mental-health professionals. We look forward to more features that protect children and empower parents.

Google Must Share Data With AI Rivals

AI companies that aspire to compete with Google in search and other information-retrieval applications got a boost from the United States government.

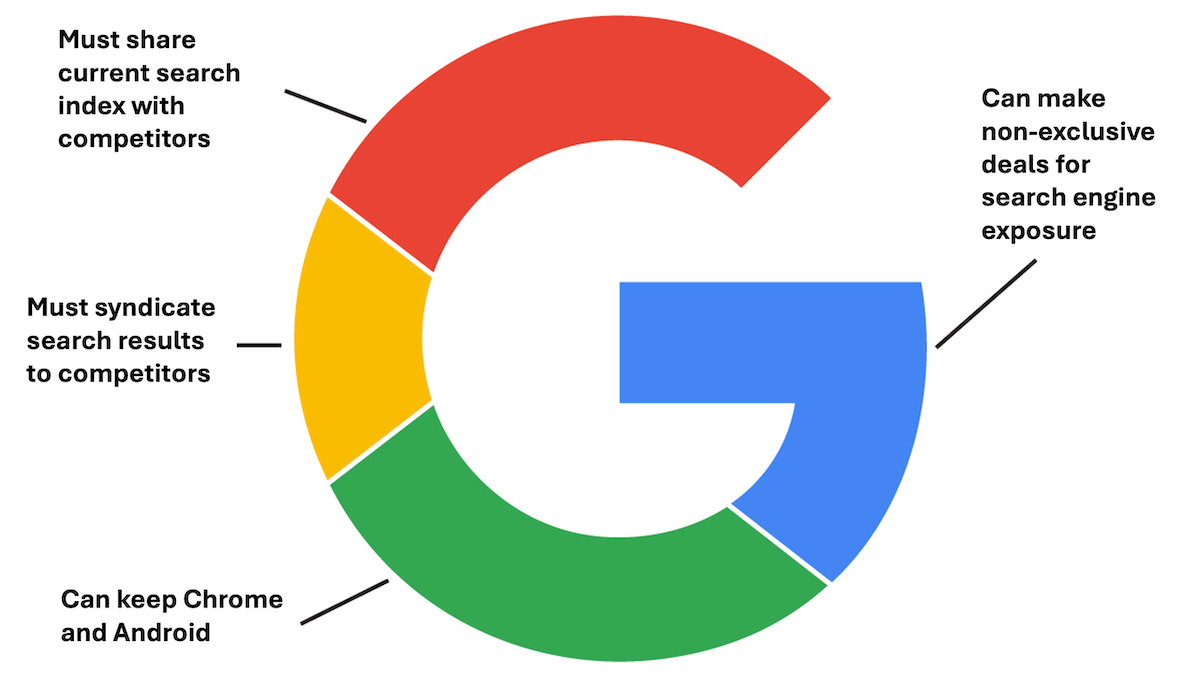

What’s new: A federal court ruled that Google must turn over its current search index — a database of web links and pages — to U.S.-based AI rivals including OpenAI, Anthropic, and Perplexity as well as search engine competitors. However, the court stopped well short of the U.S. Department of Justice’s request that the company be broken up.

How it works: Last year, the same judge ruled that Google held a monopoly on web search and had acted to maintain it. In the new ruling, the judge ordered remedies to help break that monopoly, but he allowed the company to maintain its competitive position in other businesses — specifically browsers and smartphones — of interest to rival AI companies.

- Google must share a one-time snapshot of URLs and web pages it collected with all competitors that (i) demonstrate to the government they intend to compete with Google search, (ii) are technically equipped to maintain Google’s data, and (iii) do not pose a risk to the national security of the United States. However, it does not have to share updates or metadata like its assessments of webpage quality, frequency of updates, or mobile-friendliness.

- Google must syndicate its search results to competitors under the same terms it currently does to commercial partners.

- Google will not have to sell its Chrome browser or Android mobile operating system.

- Google can continue to pay partners like Apple or Mozilla to showcase its search results in their web browsers. However, it can’t require that any partner use its browser exclusively.

Behind the news: The federal government filed its antitrust case against Google in 2020, well before the 2022 launch of ChatGPT. But the subsequent emergence of generative AI dramatically changed the stakes in two ways, as the judge points out in his ruling. First, AI has expanded the field of information retrieval beyond traditional search engines. Second, competitors like OpenAI loosened Google’s grip on the search business in a way Bing or DuckDuckGo had not. The court’s remedies reflect this new order: Google must share its data with competitors in AI as well as search, but more drastic remedies aren’t required, because AI has created robust competition in search. However, Google still faces potential remedies in a separate U.S. antitrust case over its online advertising business, along with a newly levied $3.5 billion fine by European antitrust courts.

Why it matters: The court’s ruling reflects the growing strength of AI companies in the business of retrieving information. However, it provides only limited openings to Google’s AI competitors and stops short of giving them broad opportunities to challenge the company. Had the judge ordered Google to sell off Chrome or Android — browsers and smartphones being major avenues that drive users to a search engine as well as opportunities for broad enhancement by AI — other AI companies would have a better shot at competing with Google Search.

We’re thinking: The judge said predicting the future of AI and search would require a crystal ball. Nonetheless, it’s already clear that large language models are taking over a significant part of the role once played by traditional search engines. Fostering competition could lead to even better products for helping users find information.

2 Hours With AI Versus 6 With Teacher

A growing private school system replaces the typical 6-hour school day with 2 hours of personalized, AI-assisted education.

What’s new: Alpha School, which teaches 250 preschool-through-high-school students in Austin, Texas, uses an AI-powered method that presents challenges that are tailored to a student’s level of mastery, doubling the speed of learning, the company claims. Students typically rank in the top 2 percent nationally on standardized tests including AP, MAP, and SAT, and last year, 11 out of 12 members of its first graduating class enrolled at universities that include Howard, Northeastern, Stanford, and Vanderbilt. In the coming year it will open locations in a dozen cities, The New York Times reported.

How it works: Alpha School doesn’t rely on teachers to deliver instruction. Instead, software leads students through 2 hours of academic exercises in math, science, reading, other language skills such as speaking and listening, and academic skills — a method the founders call 2 Hour Learning. The software automatically selects exercises to match students’ current level, and it allows them to progress to a new level only after they have demonstrated mastery of the previous one.

- Alpha School has shared few details about its AI. It does not use chatbots because they can encourage cheating. An anonymous writer who claims to be a parent of Alpha School students, and who is happy with the education they received, likened the instructional technology to a “turbocharged spreadsheet checklist with a spaced‑repetition algorithm,” referring to an educational technique that presents learning challenges repeatedly at particular time intervals.

- A proprietary platform delivers instruction, administers tests, tracks progress, and evaluates students’ degree of engagement via video camera. It presents lessons using applications from IXL, Khan Academy, Trilogy Software, and the school’s own engineers.

- The system aims to maintain student performance between 70 percent and 95 percent to keep lessons challenging but achievable. It also tracks time a student may waste by entering irrelevant input, guessing, or being away from the computer.

- Students spend the remainder of the school day collaborating with classmates on projects that build teamwork, leadership, and personal skills; for instance, cooking, sports, and, in one case, building a food truck. They also pursue individual projects of their choice.
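Alpha School has shared few details about its software, so the following is only a rough sketch of how mastery-gated progression with a target accuracy band might look. Everything here is hypothetical: the names `StudentState`, `record_answer`, and `update_level`, the 10-answer window, and the rule that drops a student back a level when accuracy falls below the band. Only the 70 to 95 percent band and the advance-on-mastery idea come from the description above.

```python
from dataclasses import dataclass, field

# Accuracy band reported in the article; all other constants are assumptions.
TARGET_LOW, TARGET_HIGH = 0.70, 0.95
WINDOW = 10  # hypothetical number of recent answers used to judge mastery


@dataclass
class StudentState:
    level: int = 1
    recent: list = field(default_factory=list)  # True/False per recent answer


def record_answer(state: StudentState, correct: bool) -> None:
    """Store an answer, keeping only the most recent WINDOW answers."""
    state.recent.append(bool(correct))
    del state.recent[:-WINDOW]


def update_level(state: StudentState) -> int:
    """Adjust the level once a full window of answers is available.

    Above the band: the student has shown mastery, so advance.
    Below the band: the material is too hard, so step back (assumed rule).
    Inside the band: challenging but achievable, so stay put.
    """
    if len(state.recent) < WINDOW:
        return state.level
    accuracy = sum(state.recent) / len(state.recent)
    if accuracy > TARGET_HIGH:
        state.level += 1
        state.recent.clear()
    elif accuracy < TARGET_LOW:
        state.level = max(1, state.level - 1)
        state.recent.clear()
    return state.level


student = StudentState()
for _ in range(WINDOW):
    record_answer(student, True)
update_level(student)  # ten correct answers in a row move the student up a level
```

A production system would presumably also schedule reviews (the parent quoted above mentions spaced repetition) and discount time-wasting input; neither is modeled here.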

Yes, but: Boards of education in California, Pennsylvania, and Utah rejected charter-school applications submitted by Unbound Academy, an offshoot of Alpha School, on the grounds that they failed to meet mandatory standards. Critics argue that the effectiveness of 2 Hour Learning is not supported by rigorous evidence.

Behind the news: MacKenzie Price, who has a degree in psychology, founded Alpha School in 2014 along with her husband Andrew Price, who serves as CFO of the educational software developer Trilogy. The school shifted to AI-assisted education in 2022. It’s one of several U.S. efforts to apply AI to education.

- In Florida, Miami-Dade County outfitted high schools with chatbots and trained more than 1,000 educators in how to use them.

- Public schools in New Jersey and private schools like Silicon Valley’s Khan Lab School are testing Khanmigo, an AI-powered tutoring program developed by Khan Academy. Based on GPT-4, the program answers student questions with further questions meant to encourage critical thinking.

- Kira Learning aims to implement personalized learning at scale by integrating agentic AI into educational workflows including lesson planning, instruction, grading, and bringing struggling students up to speed. (Disclosure: Kira Learning is an AI Fund-portfolio company chaired by Andrew Ng.)

- The American Federation of Teachers plans to build a national AI training center for teachers.

Why it matters: Primary and secondary education are among the great opportunities for AI. Alpha School has built a method and infrastructure for delivering personalized academic education in a way that enables students to learn efficiently, freeing up time for social learning and personal development.

We’re thinking: The press has spilled much ink on how to keep AI from helping students cheat. Instead, let’s focus on how AI can help students learn.

10 Million Tokens of Input Context

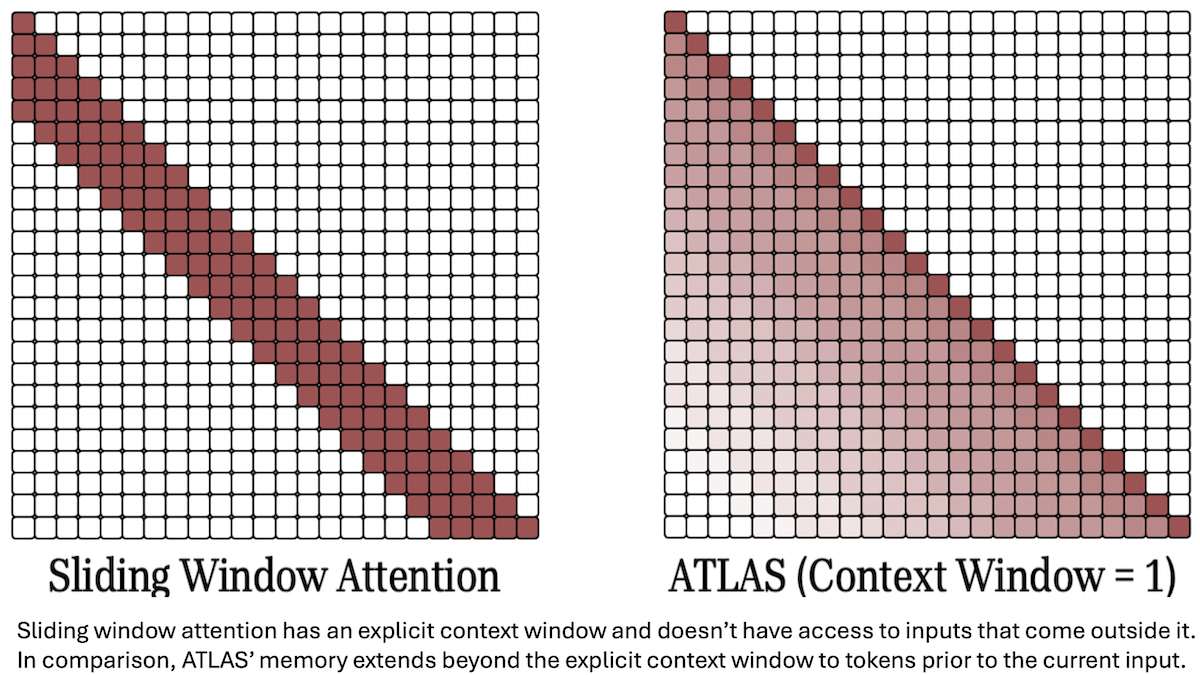

An alternative to attention enables large language models to track relationships among words across extraordinarily wide spans of text.

What’s new: Ali Behrouz and colleagues at Google devised a trainable component they call a memory module that stores and retrieves an input’s semantic content. The authors integrated this component into a transformer-like architecture, ATLAS, that can process up to 10 million tokens of input.

Key insight: Given a text token, a recurrent neural network computes a vector that represents it, which it updates when it receives the next token, and so on, so it remembers what it has processed so far. However, the vector may lose relevant information over many input tokens. An alternative is to dedicate a part of the network, or module, to generating a representation of the input and update its weights at inference. The module acts something like a retriever: When it receives sequences of tokens that are similar to those it received previously, it retrieves stored representations of the earlier sequence enriched with the latest context. In this way, it can interpret new input tokens in light of previous ones, like a typical recurrent neural network, without needing to examine all input tokens at once, like a transformer.

How it works: ATLAS replaces a transformer’s attention layers with a trainable memory module. The authors trained a 1.3 billion-parameter model to predict the next token in the FineWeb dataset of text from the web. During training, ATLAS learned good base values for the memory module’s weights, to be further modified at inference.

- Given text tokens, ATLAS used linear projections to transform a sliding context window of the last 2 tokens into a key used to find related information and a value containing that information.

- The memory module, made up of fully connected layers, received the transformed key and produced a predicted value.

- ATLAS compared the predicted value to the actual value and updated the memory module’s weights to minimize the difference, effectively learning which keys retrieve which values.

- At inference, the model’s parameters were frozen except the memory module’s weights, which reset after each session.
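The steps above can be illustrated with a toy sketch. This is not the authors' implementation: it swaps the fully connected memory module for a single linear layer, uses plain gradient descent rather than whatever optimizer ATLAS uses, and resets the memory to zeros instead of to learned base values. The names `LinearMemory`, `W_KEY`, `W_VALUE`, and the random embeddings are hypothetical stand-ins.

```python
import math
import random


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]


def unit(vector):
    """Scale a vector to unit length so the step size below behaves predictably."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


class LinearMemory:
    """Toy memory: predicted value = weights @ key, with weights updated at inference."""

    def __init__(self, dim, lr=0.5):
        self.dim = dim
        self.lr = lr
        self.reset()

    def reset(self):
        # Assumption: reset to zeros. The real model would return to trained base values.
        self.weights = [[0.0] * self.dim for _ in range(self.dim)]

    def read(self, key):
        return matvec(self.weights, key)

    def write(self, key, value):
        """Take one gradient step on 0.5 * ||weights @ key - value||^2.

        Returns the loss measured before the step.
        """
        error = [p - v for p, v in zip(self.read(key), value)]
        for i in range(self.dim):
            for j in range(self.dim):
                self.weights[i][j] -= self.lr * error[i] * key[j]
        return 0.5 * sum(e * e for e in error)


random.seed(0)
DIM = 4
# Hypothetical fixed projections; in a trained model these would be learned.
W_KEY = [[random.gauss(0, 1) for _ in range(2 * DIM)] for _ in range(DIM)]
W_VALUE = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(DIM)]
embeddings = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(2)]

# The key is projected from a window of the last 2 token embeddings,
# and the value from the latest token embedding.
key = unit(matvec(W_KEY, embeddings[0] + embeddings[1]))
value = matvec(W_VALUE, embeddings[1])

memory = LinearMemory(DIM)
losses = [memory.write(key, value) for _ in range(5)]
```

Because the key has unit length and the learning rate is 0.5, each write halves the prediction error for that key, so the loss shrinks by a factor of four per step. Calling `memory.reset()` discards what was stored, mirroring the per-session reset described above.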

Results: The authors compared ATLAS to other models of the same size that were trained on the same number of tokens. ATLAS performed best, especially in long-context tasks.

- On BABILong (answering questions about long texts), given 10 million tokens, ATLAS achieved 80 percent accuracy. Titans, a long-term memory architecture that updates its weights based on the most recently processed token, achieved approximately 70 percent accuracy. (To put these numbers in context, GPT-4’s accuracy fell from 80 percent given 1,000 tokens to below 40 percent given 100,000 tokens; its maximum input length is 128,000 tokens.)

- Across 8 question-answering benchmarks, ATLAS averaged 57.62 percent accuracy, while Transformer++ averaged 52.25 percent accuracy.

Yes, but: The authors tested ATLAS at a relatively small size, 1.3 billion parameters. How it would perform at larger scales is unclear.

Why it matters: Keeping track of very long inputs remains a challenge for most LLMs, and processing more than 2 million tokens — the current limit of Google Gemini 2.5 Pro — is a wild frontier. ATLAS updates parameters at inference to maintain context through extraordinarily long inputs, potentially opening up applications that involve data-dense inputs such as video at full resolution and frame rate.

We’re thinking: ATLAS extends context to 10 million tokens, far more than the vast majority of models can handle. What will such very long context be useful for? How will we evaluate model performance over such long inputs? What tradeoffs come with using more tokens versus better context engineering? ATLAS may push such questions further into the foreground.