How The U.S. “One Big Beautiful Bill” Will Shape AI Regulation: State-by-state regulation of AI will create a shifting patchwork of laws that are likely to hamper innovation even as they provide lesser benefits.


Dear friends,

Last week, the United States Congress passed President Trump’s “Big Beautiful Bill.” I’m disappointed it didn’t include a proposed moratorium on U.S. state-level AI regulation. While there is a role for AI regulation, it is when the technology is new and poorly understood that lobbyists are most likely to succeed at pushing through anti-competitive regulations that hamper open-source and other beneficial AI efforts. A moratorium would have bought more time for regulators to figure out the realistic risks and rewards of AI and thereby avoid bad regulatory proposals.

Many jurisdictions loosely follow this trajectory:

- When new AI technology is still poorly understood, companies can make grandiose statements about its benefits or dangers, and both traditional and social media are ineffective at fact-checking them and tend to parrot what they say. During this initial period, businesses can get away with saying almost anything.

- This opens opportunities for hype as well as fear mongering based on exaggerated claims about AI dangers. Some businesses exploit this opportunity to try to get regulators to pass anti-competitive laws that impede open-source and other competitors.

- But eventually, smart regulators learn enough about AI to understand its realistic benefits and risks. For example, the U.S. Senate’s bipartisan Insight Forum on AI, which I participated in, heard from many stakeholders and came to support innovation and dismiss ill-founded fears of “AI takeover” and the like.

Indeed, the European Union went through this trajectory as well. After the AI Act was passed, many regulators realized that a number of its “protections” are not actually helpful. They relaxed some of the law’s provisions to make it less stifling of innovation than many observers initially had feared.

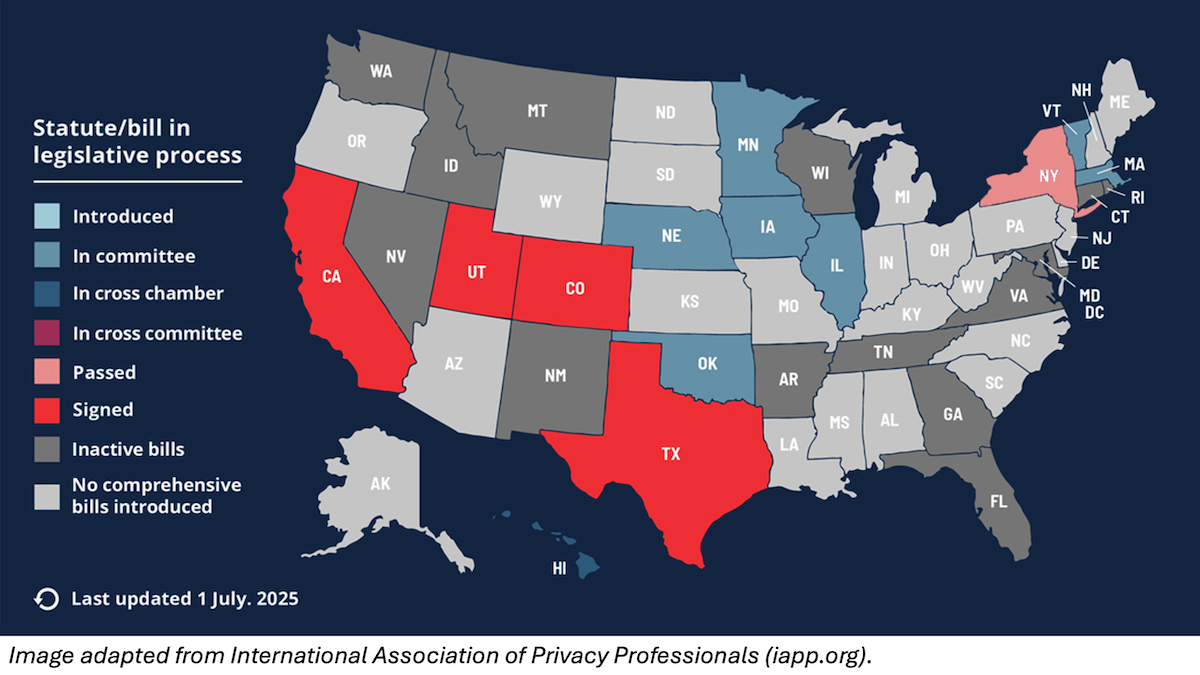

There are AI regulations that would limit harmful applications appropriately, for example, banning non-consensual deepfake porn and preventing misleading marketing. However, many states, which have fewer resources than the federal government to deeply understand AI, have proposed harmful regulations, especially those that aim to regulate the technology rather than the applications.

For example:

- California’s SB 1047 purported to impose safety requirements on frontier AI systems, but it placed ambiguous and/or technically infeasible requirements on model creators to prevent harmful downstream uses. This is akin to holding the maker of a hammer liable if someone uses it for harmful purposes. Fortunately, Governor Gavin Newsom quashed SB 1047 with a veto.

- New York’s Responsible AI Safety and Education Act, which passed the state legislature in June and awaits Governor Kathy Hochul’s signature or veto, also places ambiguous and unreasonable requirements on model builders, purportedly to guard against theoretical “critical harms.” It would hamper open source without making anyone meaningfully safer.

- The Texas Responsible AI Governance Act initially included many of the problematic elements of SB 1047. It would have created unreasonable requirements that model providers would have had a hard time complying with, and compliance would have amounted to safety theater that was unlikely to actually make people safer. Fortunately, as Texas regulators came to understand AI better, they significantly scaled back the bill, and Governor Greg Abbott signed it into law in late June. The final law focuses on specific application areas, establishes an advisory council and regulatory sandbox, and places more burden on government agencies than on private companies.

Sadly, I see the net impact of the regulations proposed so far as negative. Many would severely hamper innovation while delivering only modest benefits. This is why a moratorium on state-level regulation would have been a net benefit to AI and to society. Shutting down bad regulations for a limited period would have given regulators time to figure out AI technology and ignore irresponsible fear mongering. In addition, it would have helped them avoid creating a patchwork of state-level regulations that businesses large and small have a hard time complying with.

Perhaps a 10-year blanket moratorium was a step too far. A more modest, say, 2-year moratorium, and one that covered only the most problematic regulatory proposals, might have had a better chance of passing.

Even though a moratorium did not make it into Trump’s bill, I hope that efforts continue in the U.S. and other nations to give regulators time to understand the real risks and benefits of AI, and not pass stifling regulations during that initial period when the technology is new and the power of fear mongering is strongest.

Keep building!

Andrew