Agentic System for Harder Problems: Google’s AlphaEvolve uses LLMs and evolutionary code to solve complex math and speed up Gemini training

LLMs can struggle with difficult algorithmic or scientific challenges when asked to solve them in a single attempt. An agentic workflow outperformed one-shot attempts on hard problems, both theoretical and practical.

What’s new: Alexander Novikov, Ngân Vũ, Marvin Eisenberger, and colleagues at Google built AlphaEvolve, an agentic system that used LLMs to generate code in an evolutionary process. AlphaEvolve solved longstanding math problems and helped to reduce the training time for one of Google’s Gemini large language models.

Key insight: When we’re using an LLM to solve a difficult problem, it’s often more effective to start with a working version and gradually improve it than to generate a solution in one shot. By making small, targeted modifications and keeping only those that perform best under automated evaluation, this iterative process can solve problems that LLMs often can’t solve directly. Google used this idea in its earlier FunSearch, which used an LLM to evolve individual Python functions. This approach has become more powerful as LLMs have improved, and today it can benefit more difficult problems.

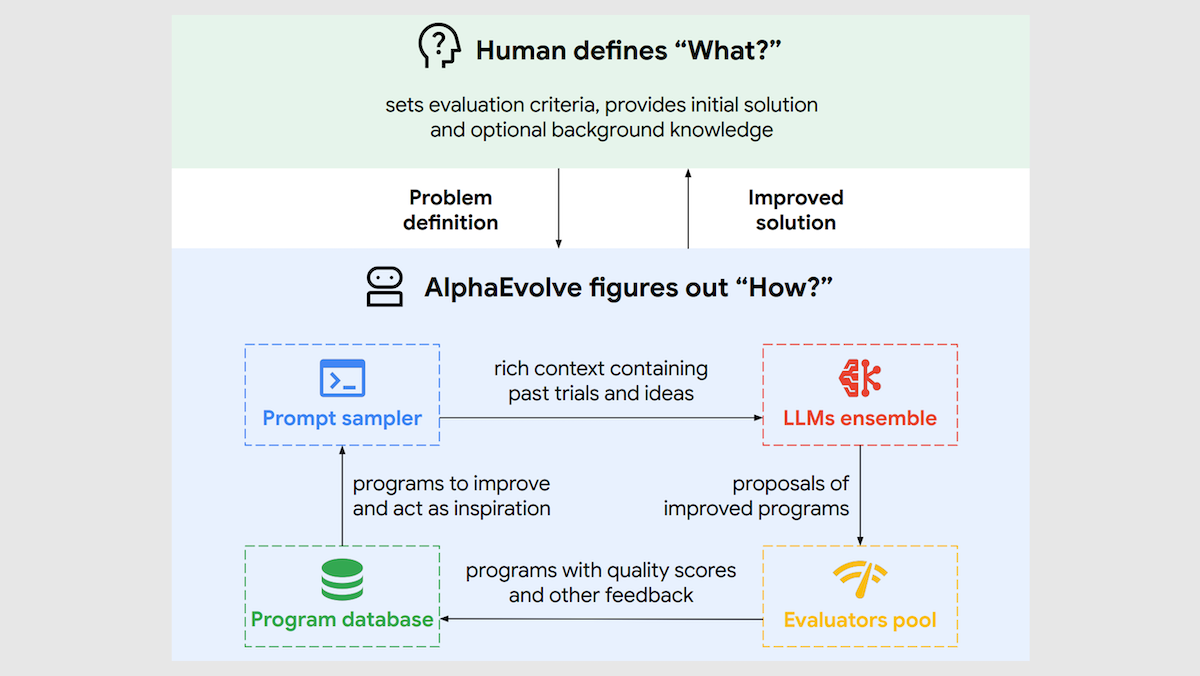

How it works: AlphaEvolve implemented an evolutionary loop: Given initial code and evaluation code, Gemini 2.0 Flash and Gemini 2.0 Pro suggested changes. AlphaEvolve stored each revised program in a database, evaluated it, prompted the models for further changes, and repeated the process.

- The initial code had to run, but it could be minimal: a skeleton with placeholder logic such as functions that return constants (for example, “def custom_sort(list): return 2”, which primed AlphaEvolve to find a custom sorting function). Special tags indicated which parts AlphaEvolve could improve (for example, “return 2” only).

- The evaluation code could use the usual Python “sorted” function to check for correctness (for instance, “def evaluate(): return custom_sort(lst) == sorted(lst)”).

- AlphaEvolve prompted Gemini 2.0 Flash and Pro to improve the code; for example, “Act as an expert software developer. Your task is to iteratively improve the provided codebase. [USER PROVIDED CODE]”. Gemini 2.0 Flash generated ideas quickly, while Gemini 2.0 Pro provided slower but higher-quality suggestions. Each LLM proposed small alterations.

- AlphaEvolve ran and scored the altered code using the evaluation code. AlphaEvolve updated a database with the new alterations and their scores.

- The system continued in a loop: It sampled high-scoring programs from its database to include in the prompts for the two LLMs, which suggested further alterations. Then it evaluated the altered programs, stored them in the database, and so on. (The authors don’t explain how the loop ends.)
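
The steps above can be sketched as a toy loop in Python. This is a minimal illustration under stated assumptions, not AlphaEvolve's implementation: `propose_change` is a hypothetical random stand-in for the Gemini prompts, programs are restricted to sequences of compare-and-swap steps on lists of length 4, the parent is sampled from the `top_k` best programs, and the loop stops at a perfect score or after a fixed number of iterations (an assumed rule, since the authors don't describe one).

```python
import itertools
import random

N = 4  # toy assumption: evolved programs sort lists of exactly this length
PAIRS = [(i, j) for i in range(N) for j in range(i + 1, N)]


def render(steps):
    """Turn a list of compare-and-swap steps into Python source code."""
    lines = ["def custom_sort(lst):", "    lst = list(lst)"]
    for i, j in steps:
        lines.append(
            f"    if lst[{i}] > lst[{j}]: lst[{i}], lst[{j}] = lst[{j}], lst[{i}]"
        )
    lines.append("    return lst")
    return "\n".join(lines)


def evaluate(steps):
    """Score a program as the fraction of test inputs it sorts correctly."""
    namespace = {}
    exec(render(steps), namespace)  # run the candidate program's source
    custom_sort = namespace["custom_sort"]
    cases = list(itertools.permutations(range(N)))
    passed = sum(custom_sort(case) == sorted(case) for case in cases)
    return passed / len(cases)


def propose_change(parent, rng):
    """Hypothetical stand-in for the LLMs: append one random small edit."""
    return parent + [rng.choice(PAIRS)]


def evolve(iterations=200, top_k=5, seed=0):
    rng = random.Random(seed)
    initial = []  # skeleton program: it runs but returns its input unchanged
    database = [(evaluate(initial), initial)]
    for _ in range(iterations):
        # Sample a parent from the highest-scoring programs stored so far.
        best = sorted(database, key=lambda entry: entry[0], reverse=True)[:top_k]
        _, parent = rng.choice(best)
        child = propose_change(parent, rng)
        database.append((evaluate(child), child))  # store program and score
        if database[-1][0] == 1.0:  # assumed stopping rule: perfect score
            break
    return max(database, key=lambda entry: entry[0]), database


if __name__ == "__main__":
    (best_score, best_steps), database = evolve()
    print(f"best score {best_score:.2f} after {len(database)} programs")
    print(render(best_steps))
```

The scoring function plays the role of the evaluation code: it compares each candidate against Python's `sorted`. Swapping the random `propose_change` for a model call that sees the sampled parent in its prompt would bring the sketch closer to the system described here.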

Results: AlphaEvolve achieved breakthroughs in both math and software engineering.

- AlphaEvolve discovered a new algorithm for multiplying 4×4 matrices of complex values that uses 48 multiplications, fewer than the 49 required by Strassen’s method, the first such progress in 56 years. (Prior work by Google improved Strassen’s method for 4×4 matrices of binary values.)

- The authors used the system to tackle over 50 other math problems. It matched the performance of the best-known algorithms in about 75 percent of cases and surpassed them in 20 percent, for instance the kissing number problem (packing spheres in 11-dimensional space so they all touch the same sphere).

- In software engineering, it optimized key components of Google’s infrastructure. (i) It improved Google’s cluster scheduling algorithms, freeing up 0.7 percent of total computing resources that otherwise would be idle. (ii) It discovered a GPU kernel configuration that accelerated attention by 32 percent. (iii) It found ways to split up matrices that delivered an average 23 percent speedup for matrix multiplication relative to previous expert-designed heuristics, which reduced Gemini’s training time by 1 percent.
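
For context on the matrix multiplication result, the sketch below counts scalar multiplications for the two classical baselines on 4×4 complex matrices: the schoolbook method and Strassen's method applied recursively. It does not reproduce AlphaEvolve's 48-multiplication algorithm, which isn't given in this article. The helper names (`add`, `sub`, `split`, `strassen`, `naive`) and the mutable `counter` list are illustrative choices.

```python
import random


def add(A, B):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A, B):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(A, B)]


def split(M):
    """Split a square matrix into four equal quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def strassen(A, B, counter):
    """Multiply square matrices (size a power of 2) with 7 recursive products."""
    if len(A) == 1:
        counter[0] += 1  # one scalar multiplication
        return [[A[0][0] * B[0][0]]]
    a11, a12, a21, a22 = split(A)
    b11, b12, b21, b22 = split(B)
    m1 = strassen(add(a11, a22), add(b11, b22), counter)
    m2 = strassen(add(a21, a22), b11, counter)
    m3 = strassen(a11, sub(b12, b22), counter)
    m4 = strassen(a22, sub(b21, b11), counter)
    m5 = strassen(add(a11, a12), b22, counter)
    m6 = strassen(sub(a21, a11), add(b11, b12), counter)
    m7 = strassen(sub(a12, a22), add(b21, b22), counter)
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    top = [r1 + r2 for r1, r2 in zip(c11, c12)]
    bottom = [r1 + r2 for r1, r2 in zip(c21, c22)]
    return top + bottom


def naive(A, B, counter):
    """Schoolbook multiplication: n**3 scalar multiplications."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                counter[0] += 1
                C[i][j] += A[i][k] * B[k][j]
    return C


def random_complex_matrix(n, rng):
    # Small integer parts keep the floating-point arithmetic exact.
    return [[complex(rng.randint(-5, 5), rng.randint(-5, 5)) for _ in range(n)]
            for _ in range(n)]


rng = random.Random(0)
A = random_complex_matrix(4, rng)
B = random_complex_matrix(4, rng)
strassen_count, naive_count = [0], [0]
C_strassen = strassen(A, B, strassen_count)
C_naive = naive(A, B, naive_count)
print(strassen_count[0], naive_count[0], C_strassen == C_naive)
```

Two levels of Strassen's recursion give 7 × 7 = 49 scalar multiplications versus 64 for the schoolbook method, so 48 improves on Strassen's count by one.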

Why it matters: AlphaEvolve proposes thousands of candidate ideas — some bad, some brilliant — to evolve better programs. The authors show that this approach can improve algorithms that have stood for decades as well as computing infrastructure designed by Google engineers. Thus, AlphaEvolve adds to the growing evidence that LLMs can act as collaborators in cutting-edge research, exploring broad problem spaces and finding novel solutions. Other examples include Co-Scientist and SWE-agent.

We’re thinking: Relatively simple evaluations enabled the authors’ agentic evolutionary system to gradually improve. More broadly, evaluations are proving to be important to a wide variety of agentic workflows.