Gemini’s Environmental Impact Measured: Google study directly measures electricity, water use, and greenhouse emissions of its models

Google determined that its large language models have a smaller environmental footprint than previous estimates had led it to expect.

What’s new: For one year, Google researchers studied the energy consumption, greenhouse gas emissions, and water consumption of the models that drove its Gemini AI assistant in applications like Gmail, Calendar, Drive, Flights, and Maps. (They didn’t identify the specific models involved.) They found that the impact of processing a single prompt was roughly comparable to loading a web page or streaming a brief video to a television screen.

How it works: The authors confined their study to inference in text-processing tasks, calculating the impact of processing a single “median” prompt (one that consumes the median amount of energy across all prompts and models). They considered only activities under Google’s operational control, including data-center construction and hardware manufacturing, but not including internet routing or end-user devices.

- Energy: The authors measured energy used to classify prompts, route them to specific models, and rank potential responses. To accomplish this, they traced the hardware used and measured energy consumption of all hardware components within a server rack, including idle machines, active processors, and cooling systems. TPUs, Google’s custom AI processors, accounted for 58 percent of the total energy consumption.

- Emissions: The authors calculated greenhouse gas emissions by multiplying the energy consumed per median prompt by the previous year’s average emissions per unit of electricity plus operational emissions from sources like heating and air conditioning, as well as embodied emissions from hardware manufacturing, transportation, and construction of the data center itself. They estimated operational and embodied emissions using results from this study.

- Water: Water is used to cool data-center hardware, and around 80 percent of it evaporates. The authors measured water input minus water returned in 2023 and 2024. This enabled them to calculate water usage per unit of energy (1.15 liters per kilowatt-hour), which they multiplied by the amount of energy used per prompt to calculate the water usage per prompt.
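The water calculation is simple enough to reproduce as a rough check. The sketch below is not the authors’ code. It assumes that the 1.15 liters per kilowatt-hour ratio is applied directly to the 0.24 watt-hours that the study reports for a median text prompt, and the function and constant names are made up for illustration.

```python
# Figures quoted in the article; names are illustrative, not from the study.
WATER_LITERS_PER_KWH = 1.15   # water usage per unit of energy
ENERGY_WH_PER_PROMPT = 0.24   # energy of a median text prompt


def water_ml_per_prompt(energy_wh: float, liters_per_kwh: float) -> float:
    """Convert per-prompt energy (watt-hours) into per-prompt water use (milliliters)."""
    energy_kwh = energy_wh / 1000.0          # Wh -> kWh
    liters = energy_kwh * liters_per_kwh     # kWh * (L / kWh) -> L
    return liters * 1000.0                   # L -> mL


water_ml = water_ml_per_prompt(ENERGY_WH_PER_PROMPT, WATER_LITERS_PER_KWH)
print(f"{water_ml:.3f} mL per prompt")
```

Under that assumption the result is about 0.28 milliliters, close to the 0.26 milliliters the study reports. The small gap presumably reflects details of the authors’ accounting that are not spelled out here.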

Results: The energy and water consumed and greenhouse gases emitted by the Gemini AI assistant’s models fell well below Google’s estimates in previous years. Moreover, between May 2024 and May 2025, given a median prompt, the models’ energy consumption fell by a factor of 33 and their greenhouse gas emissions fell by a factor of 44, reductions attributable to clean-energy procurement and more energy-efficient hardware and software.

- A median text prompt consumed approximately 0.24 watt-hours, around the amount of energy that a television screen consumes over 9 seconds.

- The median prompt consumed 0.26 milliliters of water, about five drops.

- Each median prompt generated about 0.03 grams of greenhouse gases, roughly the amount emitted when loading a single webpage.
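As a sanity check on the television comparison, the short sketch below backs out the screen power implied by 0.24 watt-hours over 9 seconds. The function name is hypothetical, and the calculation is only a unit conversion, not part of the study.

```python
ENERGY_WH_PER_PROMPT = 0.24  # median text prompt, from the article
TV_SECONDS = 9               # viewing time quoted in the article


def implied_power_watts(energy_wh: float, seconds: float) -> float:
    """Average power (watts) that uses `energy_wh` watt-hours in `seconds` seconds."""
    joules = energy_wh * 3600.0  # 1 Wh = 3,600 joules
    return joules / seconds      # watts = joules per second


tv_watts = implied_power_watts(ENERGY_WH_PER_PROMPT, TV_SECONDS)
print(f"Implied television power: {tv_watts:.0f} W")
```

The comparison implies a screen drawing a little under 100 watts.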

Behind the news: Recently, Mistral assessed its Mistral Large 2 model in a similar way (although its study included training). It found that, at inference, a 400-token prompt generated 1.14 grams of greenhouse gases and consumed 45 milliliters of water.

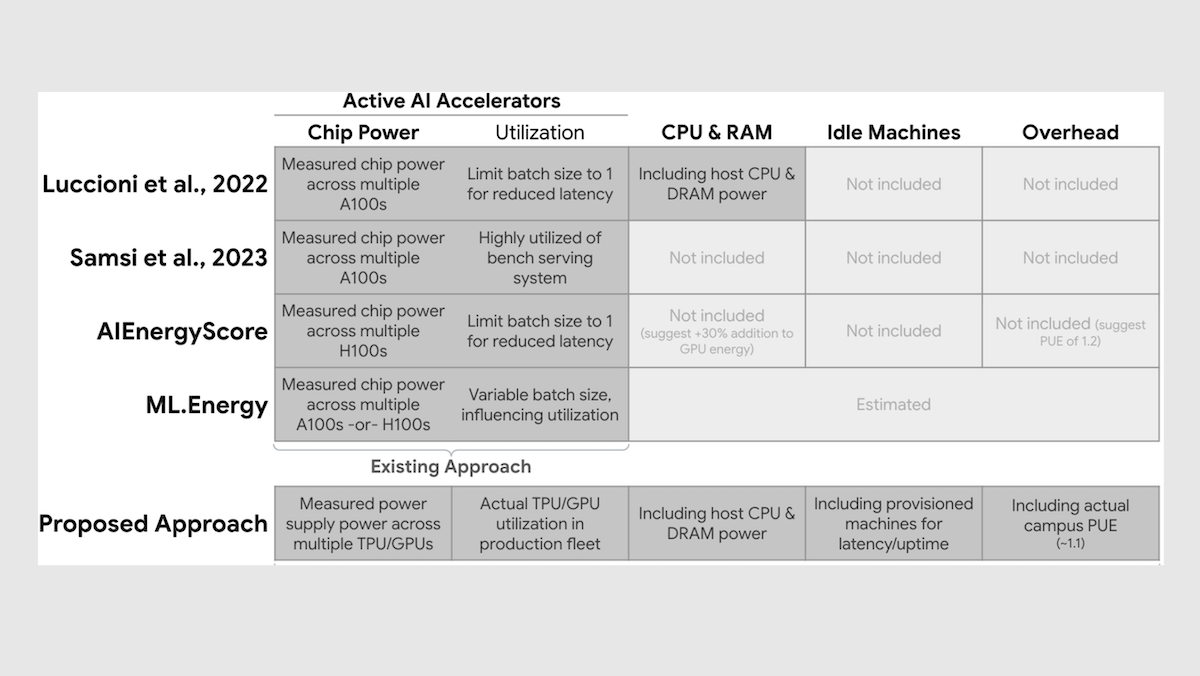

Yes, but: Earlier research arrived at measurements as much as two orders of magnitude higher than Google’s, largely because it included factors that Google did not, The Verge reported. For instance, a 2023 study found that GPT-3 used about 10 milliliters to 50 milliliters of water per (average) prompt, roughly 40 to 200 times Google’s Gemini figure. That study included water used in generating electricity, such as steam used to turn turbines or water used to cool nuclear generators, which Google omitted. Further, the 2023 study based its estimate of greenhouse gas emissions on actual emissions of local grids, while Google based its measurement on the company’s commitments to buy energy from low-carbon sources. Google did not respond to questions from The Verge.
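The size of the gap follows directly from the reported numbers. The sketch below divides the 2023 study’s GPT-3 range by Google’s median-prompt water figure. The variable names are illustrative, and the ratio compares results produced by different methodologies, so it shows scale rather than a like-for-like measurement.

```python
GEMINI_WATER_ML = 0.26              # Google's median-prompt figure
GPT3_WATER_ML_RANGE = (10.0, 50.0)  # 2023 study's per-prompt range for GPT-3

# Ratio of each end of the GPT-3 range to the Gemini figure.
ratios = tuple(value / GEMINI_WATER_ML for value in GPT3_WATER_ML_RANGE)

low, high = ratios
print(f"GPT-3 estimate is about {low:.0f}x to {high:.0f}x the Gemini figure")
```

The ratios come out near 38 and 192, which round to the 40 to 200 times cited above.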

Why it matters: Assessing the environmental cost of AI has proven to be difficult, and different approaches paint very different pictures. Google’s approach has the benefit of focusing on variables under its control and addressing energy, greenhouse gases, and water. However, it leaves out important contributors to these measures — including training — as well as consumption of materials, as highlighted in Mistral’s assessment.

We’re thinking: The AI industry needs a standard method that would enable AI companies to report their models’ environmental impacts and the public to compare them. Kudos to Google, Mistral, and the independent researchers for proposing practical approaches and continuing to refine them.