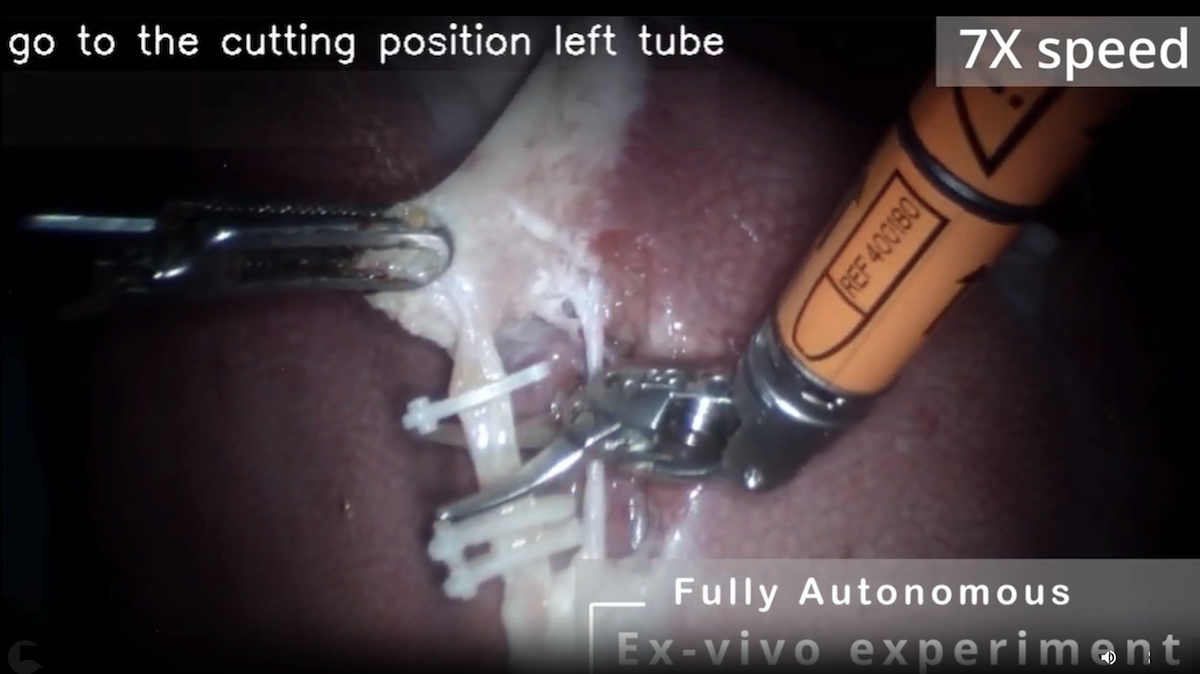

Robot Surgeon Cuts and Clips: Researchers at Stanford, Johns Hopkins, and Optosurgical demonstrate a robot that operates on animal organs without human intervention

An autonomous robot performed intricate surgical operations without human intervention.

What’s new: Ji Woong (Brian) Kim and colleagues at Johns Hopkins, Stanford, and the surgical technology company Optosurgical developed Hierarchical Surgical Robot Transformer (SRT-H), a system that performs surgery with only routine help from humans. The system, which uses a two-armed surgical robot that is ordinarily operated by hand, successfully completed the key clipping-and-cutting steps to remove gallbladders.

How it works: SRT-H pairs two transformer models: a high-level planner that decides what step to take next and a low-level action generator that turns the planner’s decision into signals that control an Intuitive Surgical da Vinci robot. Both models were trained via imitation learning. That is, they learned by copying recorded human demonstrations: the planner learned to map camera images to natural-language instructions, and the action generator learned to map images and instructions to robot-arm motions.
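
Imitation learning of this kind is often framed as behavior cloning: supervised learning that regresses the demonstrator's action from the observation. The article doesn't give SRT-H's loss functions, so the following is only a toy sketch under assumptions: a linear policy instead of a transformer, a squared-error loss, and short made-up vectors standing in for images and arm motions. The name `train_behavior_cloning` is hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

# (observation, action the human demonstrator took) -- toy stand-in for real recordings
Demo = Tuple[Sequence[float], Sequence[float]]

def train_behavior_cloning(demos: List[Demo], lr: float = 0.05, epochs: int = 200,
                           seed: int = 0) -> Callable[[Sequence[float]], List[float]]:
    """Hypothetical sketch: fit a linear policy action = W @ obs + b by SGD on
    squared error, i.e. learn to reproduce what the demonstrator did."""
    rng = random.Random(seed)
    obs_dim, act_dim = len(demos[0][0]), len(demos[0][1])
    W = [[0.0] * obs_dim for _ in range(act_dim)]
    b = [0.0] * act_dim

    def policy(obs: Sequence[float]) -> List[float]:
        # Reads W and b, which are updated in place during training.
        return [sum(w * o for w, o in zip(W[i], obs)) + b[i] for i in range(act_dim)]

    data = list(demos)
    for _ in range(epochs):
        rng.shuffle(data)
        for obs, target in data:
            pred = policy(obs)
            for i in range(act_dim):
                err = pred[i] - target[i]  # gradient of 0.5 * err**2 w.r.t. the prediction
                for j in range(obs_dim):
                    W[i][j] -= lr * err * obs[j]
                b[i] -= lr * err
    return policy
```

Because the loss only rewards matching recorded actions, the policy can be no better than its demonstrations, which is one reason the correction labels described below matter.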

- To build a training dataset, the team recorded around 17 hours of operations in which humans operated the robot, performing 17 steps to remove gallbladders from 34 pig tissues that had been separated from the animals’ bodies. The recordings captured the outputs of a tube-mounted endoscope, two cameras mounted on the robot’s wrists, and the translations, rotations, and gripper openings of each robot arm.

- Annotators labeled each step (such as “grab gallbladder” and “place second clip on left tube”) along with corrective instructions wherever the surgeons revised their actions in progress (for instance, “move right arm to the right”). This process resulted in roughly 16,000 labeled, time-stamped, brief sequences of images with corresponding robotics data and natural-language labels.

- Given the 5 most recent endoscope frames, the high-level transformer learned to predict (i) whether a correction was required (that is, whether the surgeons revised their actions) and, if so, an appropriate natural-language instruction for the correction and (ii) an instruction that described the next step in the surgery. A pretrained Swin-T encoded the images, and the transformer’s decoder learned to output the next-step instruction, the binary correction flag, and the corrective instruction.

- Given the high-level transformer’s correction flag, next-step instruction, and corrective instruction as well as images from the endoscope and wrist cameras, the low-level transformer learned to generate roughly the next 2 seconds of robot motion. A pretrained EfficientNet-B3 encoded the images, a pretrained DistilBERT embedded the next-step instruction, and FiLM layers used the embedded instruction to modulate the image features, conditioning the visual representation on the current instruction. The transformer’s decoder learned to generate the next robot action sequence.

- At inference, every 3 seconds, the high-level transformer processed the 5 most recent endoscope frames and issued a correction flag, next-step instruction, and corrective instruction. The system used the flag to decide which instruction to pass to the low-level transformer. The low-level transformer then executed actions in chunks of roughly 30 time steps for grabbing and 20 time steps for clipping and cutting. The system paused automatically for humans to load new clips or swap between the cutter and clip-applier tools, a role normally assigned to a surgical nurse.
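
FiLM (feature-wise linear modulation) conditions one set of features on another input by predicting a per-channel scale (gamma) and shift (beta) from that input and computing gamma * x + beta. The article doesn't detail how SRT-H wires its FiLM layers, so this is a generic, framework-free sketch: the feature layout, dimensions, and weights are hypothetical, and in a real model the two projections would be learned.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """y = weights @ x + bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def film(features: List[Vector], text_emb: Vector,
         w_gamma: Matrix, b_gamma: Vector,
         w_beta: Matrix, b_beta: Vector) -> List[Vector]:
    """Feature-wise linear modulation (hypothetical shapes).

    features: one vector of C channel values per spatial position (image features).
    text_emb: embedding of the current instruction.
    One gamma and one beta per channel are predicted from text_emb and applied
    identically at every spatial position: out = gamma * x + beta.
    """
    gamma = linear(w_gamma, b_gamma, text_emb)  # per-channel scale
    beta = linear(w_beta, b_beta, text_emb)     # per-channel shift
    return [[g * x + bt for g, x, bt in zip(gamma, pos, beta)] for pos in features]
```

The design choice is that the instruction never has to be concatenated into the image; it only rescales and shifts channels, so different instructions can emphasize different visual features.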
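
The inference procedure above can be sketched as a control loop. This is a toy under stated assumptions, not the authors' code: `planner` and `policy` are hypothetical stand-ins for the two transformers, keyword matching on step names decides chunk length and tool swaps, and reloading clips is not modeled.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence

@dataclass
class PlannerOutput:
    correction_flag: bool       # does the planner think a correction is needed?
    next_step: str              # e.g. "place second clip on left tube"
    correction: Optional[str]   # e.g. "move right arm to the right"

def select_instruction(out: PlannerOutput) -> str:
    # The flag decides which instruction the low-level policy receives.
    if out.correction_flag and out.correction:
        return out.correction
    return out.next_step

def chunk_length(next_step: str) -> int:
    # Hypothetical rule: ~30 time steps for grabbing, ~20 for clipping/cutting.
    return 30 if "grab" in next_step else 20

def tool_for(step: str) -> Optional[str]:
    # Hypothetical helper: which instrument a step needs, if any.
    if "clip" in step:
        return "clip applier"
    if "cut" in step:
        return "cutter"
    return None

def run(frames: Sequence[object],
        planner: Callable[[List[object]], PlannerOutput],
        policy: Callable[[str, int], List[object]]) -> List[str]:
    history = deque(maxlen=5)   # keeps only the 5 most recent endoscope frames
    current_tool: Optional[str] = None
    log: List[str] = []
    for frame in frames:        # one iteration ~ one planning cycle (~3 s)
        history.append(frame)
        out = planner(list(history))
        if out.next_step == "done":
            break
        needed = tool_for(out.next_step)
        if needed and needed != current_tool:
            log.append(f"pause: human swaps to {needed}")  # nurse-style hand-off
            current_tool = needed
        instruction = select_instruction(out)
        actions = policy(instruction, chunk_length(out.next_step))
        log.append(f"{instruction} ({len(actions)} steps)")
    return log
```

Keeping the corrective instruction as plain text means the same low-level policy handles both normal steps and fixes; only the string it is conditioned on changes.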

Results: Tested on 8 pig tissues, SRT-H successfully performed each operation, correcting its own mistakes along the way.

- SRT-H successfully completed all 17 clipping-and-cutting steps on all tissues despite individual variations. When it encountered a problem, it corrected itself and proceeded to complete the operation successfully.

- The high-level transformer correctly predicted next-step instructions with 97 percent accuracy, correction flags with 95 percent accuracy, and corrective instructions (among 18 possible classes of motion) with 70 percent accuracy.

- In a preliminary comparison with an expert surgeon, SRT-H moved the robot less and moved it more smoothly than the surgeon did. However, SRT-H was nearly 41 percent slower. (The authors modified SRT-H’s instruments so they would perform clipping and cutting motions without actually clipping or cutting tissue. This enabled the surgeon to operate on the same tissues as the robot.)
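
The article doesn't say how path length, smoothness, or speed were measured. One common way to quantify them, assumed here purely for illustration, is total tool-tip path length, mean squared jerk (the third derivative of position, estimated with finite differences), and percent slowdown relative to the surgeon's time. The function names and the fixed sampling interval `dt` are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # tool-tip position (x, y, z)

def path_length(traj: Sequence[Point]) -> float:
    """Total distance travelled: sum of straight-line segment lengths."""
    return sum(math.dist(a, b) for a, b in zip(traj, traj[1:]))

def mean_squared_jerk(traj: Sequence[Point], dt: float) -> float:
    """Smoothness proxy (lower = smoother): mean squared magnitude of the third
    finite difference of position, p3 - 3*p2 + 3*p1 - p0, divided by dt**3."""
    jerks = []
    for i in range(len(traj) - 3):
        p0, p1, p2, p3 = traj[i:i + 4]
        j = [(d - 3 * c + 3 * b - a) / dt ** 3 for a, b, c, d in zip(p0, p1, p2, p3)]
        jerks.append(sum(x * x for x in j))
    return sum(jerks) / len(jerks) if jerks else 0.0

def percent_slower(t_system: float, t_reference: float) -> float:
    """How much longer the system took, as a percentage of the reference time."""
    return 100.0 * (t_system - t_reference) / t_reference
```

With hypothetical timings of 100 seconds for the surgeon and 141 seconds for the robot, `percent_slower(141, 100)` gives 41.0, the kind of gap the comparison reports.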

Yes, but: The authors tested SRT-H on tissues that had been removed from an animal’s body. Real-world surgery involves the body as a whole, and surgeons must manage bleeding, tissue motion from respiration, and visual occlusions that might challenge SRT-H.

Behind the news: Prior autonomous surgical systems often rely on custom hardware and setups. For instance, Smart Tissue Autonomous Robot (STAR), which combines model-based planning with a hand-crafted state machine, uses an enhanced endoscope. The instrument integrates near-infrared (NIR) fluorescence and 3D imaging, so the system can be guided by NIR markers on a patient’s tissue and plan sutures on 3D surfaces. By contrast, SRT-H uses the widely deployed da Vinci robot (over 10,000 units in hospitals globally) and learned from RGB video annotated in natural language, with no NIR markers, 3D scanners, or special fixtures.

Why it matters: SRT-H is a significant step toward surgeries that can be performed safely by an autonomous robot. There’s still a long way to go: The system performed only portions of gallbladder removals, and it did so on tissues that were outside the body. Nonetheless, it did its job nearly flawlessly. Its natural language interface makes its decisions interpretable and enables humans to override or correct the system using verbal commands, important steps toward safe autonomous surgeries. And since SRT-H relies on imitation learning, presumably it could learn to perform other procedures, given appropriate demonstrations.

We’re thinking: In an operating room, the ability to recover from unexpected events trumps perfect execution of predetermined plans. SRT-H’s correction mechanism enables it to recover from its own mistakes, an important advantage over rigid systems that may work well in the lab but struggle under real-world conditions.