California Reframes AI Regulations: California proposes new guidelines to balance AI innovation and regulation

A committee convened by California Governor Gavin Newsom proposed principles intended to balance AI innovation with careful governance. The group sought to rethink AI regulation after Newsom vetoed earlier proposed legislation.

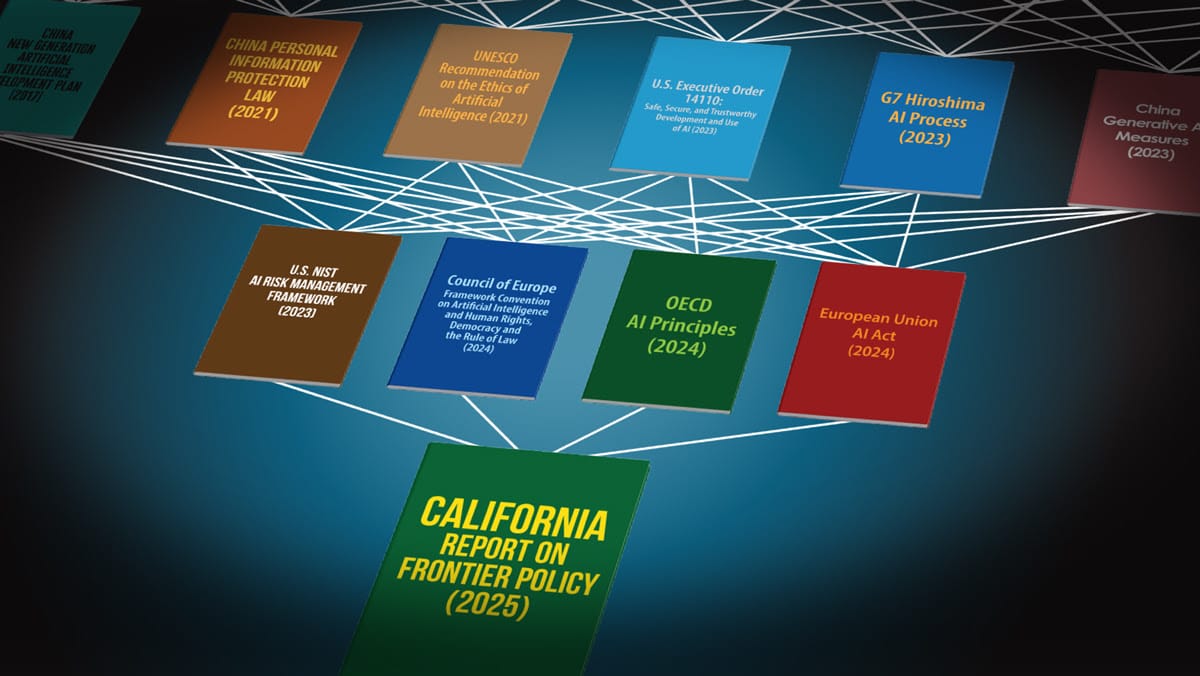

What’s new: The Joint California Policy Working Group on AI Frontier Models published “The California Report on Frontier AI Policy,” which outlines principles for California lawmakers to consider in regulating cutting-edge models. Rishi Bommasani of the Stanford Center for Research on Foundation Models and Scott R. Singer of the Carnegie Endowment for International Peace led the effort.

How it works: The authors assessed the proposals of the vetoed legislation, SB 1047, and the progress of AI in the nine months since. The group considered feedback from more than 60 experts from a range of disciplines. Their report focuses on regulating frontier models, loosely defined as the most capable foundation models, rather than applications. The authors conclude:

- Lawmakers should consider a broad spectrum of evidence, including technical methods, simulations, and historical experience. Drawing on a variety of sources can help prevent particular stakeholders from misrepresenting data, as oil and tobacco interests did in the past.

- Laws should incentivize companies to disclose information that protects the public. AI companies have “not yet coalesced around norms for transparency,” but those that share information can benefit from higher trust by the public and regulators.

- Reporting adverse events should be mandatory, and there should be clear ways to address any resulting risks to prevent minor problems from snowballing into major ones. Moreover, whistleblowers must be protected. These measures are crucial to achieving transparency in critical activities such as acquiring data, enforcing security, and ensuring safety.

- Early choices about the design of technology can lock in future challenges. Thus legislators should anticipate potential future developments and behaviors, rather than waiting for harms to occur. In addition, laws that trigger regulations based on variables like computational budget or numbers of users must be flexible, so they can remain useful even if those variables change rapidly.

- The authors note the need for regulators to address recognized hazards, such as bias and disinformation, as well as potential threats such as AI-enabled biological attacks. They don’t address AI’s impact on labor or energy consumption.

Behind the news: Although the White House has ordered an AI action plan, U.S. states have passed the bulk of regulations. However, this may be changing. Congress is debating legislation that would ban states from enacting their own AI laws for a period of 10 years. The aim is to avoid forcing AI developers to navigate a patchwork of laws state by state, which would risk slowing down U.S. AI development, hampering competition, and discouraging open-source development.

Why it matters: Regulating AI is tricky, particularly given the intense lobbying efforts to pass laws that would favor particular large companies or block competition from open-source software. AI is sparking innovations in a wide range of fields, including agriculture, biotechnology, clean technology, education, finance, and medicine. Fundamental principles like weighing evidence rather than theory, engaging a wide variety of stakeholders, and requiring transparency can help regulators craft laws that enable the public to benefit from technological progress without imposing undue burdens on developers.

We’re thinking: The working group sensibly discarded many of the counterproductive requirements of California’s deeply flawed SB 1047, such as making AI developers liable if their models are used to cause significant damage. However, the new guidelines retain the earlier emphasis on regulating general-purpose technology — foundation models — rather than specific applications. We should regulate the way AI models are used instead of the models themselves.