More Consistent Characters and Styles: Black Forest Labs Launches FLUX.1 Kontext for Generating and Altering Images with Consistent Details

Same character, new background, new action. That’s the focus of the latest text-to-image models from Germany’s Black Forest Labs.

What’s new: The FLUX.1 Kontext family, which comes in versions dubbed max, pro, and dev, is trained to alter images in controlled ways. The company plans to release the weights for FLUX.1 Kontext dev but has not yet specified the licensing terms.

- Input/output: text, image in; image out

- Architecture: Unspecified text encoders, convolutional neural network image encoder-decoder, transformer. FLUX.1 Kontext dev: 12 billion parameters; other parameter counts undisclosed

- Features: Character consistency, local and global alterations

- Availability/price: FLUX.1 Kontext max and FLUX.1 Kontext pro available via FLUX Playground and various partners, $0.08 per image (FLUX.1 Kontext max) and $0.04 per image (FLUX.1 Kontext pro) via Fal, an image-generation platform.

- Undisclosed: Parameter counts of FLUX.1 Kontext max and FLUX.1 Kontext pro, architecture of text encoders, training data, evaluation protocol, open-weights license
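
For a rough sense of what the listed per-image prices mean for a batch job, here is a minimal sketch. It assumes flat per-image pricing with no volume discounts or extra fees, which the listing does not confirm; the function name `batch_cost_usd` is made up for illustration.

```python
# Per-image prices in US cents, taken from the Fal prices listed above.
# Assumption: pricing is flat per image (no discounts, no extra fees).
PRICE_CENTS = {
    "FLUX.1 Kontext max": 8,
    "FLUX.1 Kontext pro": 4,
}


def batch_cost_usd(model: str, num_images: int) -> float:
    """Return the estimated cost in US dollars of generating num_images images."""
    # Work in whole cents first so the arithmetic stays exact, then convert.
    return PRICE_CENTS[model] * num_images / 100


if __name__ == "__main__":
    for name in PRICE_CENTS:
        print(f"{name}: ${batch_cost_usd(name, 250):.2f} for 250 images")
```

At these rates, a given budget buys twice as many images from FLUX.1 Kontext pro as from FLUX.1 Kontext max.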

How it works: The FLUX.1 Kontext models include encoders that embed input text and/or images, a transformer that processes the embeddings, and an image decoder that generates images. The technical report doesn’t describe how the team trained the models for character consistency and image editing.

- The team trained the convolutional neural network encoder-decoder to reproduce images and to fool a discriminator (architecture and training unspecified) into classifying them as real.

- Having frozen the encoders, they trained the transformer — given a time step, embedding of a text prompt, embedding of a reference image, and noisy image embedding — to remove the noise over a series of steps.

- They further trained the transformer to encourage it to produce noise-free embeddings that a second discriminator would classify as representing real images. This process, a variant of adversarial diffusion distillation, helps reduce the number of steps needed to produce a good image embedding.
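
To make the step-by-step noise removal concrete, here is a toy sketch of an iterative denoising loop. It is not Black Forest Labs' sampler: `toy_denoiser` is a hypothetical stand-in for the transformer, the embeddings are short lists of numbers, and the linear noise schedule is an assumption chosen for simplicity.

```python
import random


def toy_denoiser(noisy, t, text_emb, ref_emb):
    """Hypothetical stand-in for the transformer.

    A real model would use the noisy embedding and time step t to predict
    a clean image embedding. This toy version ignores both and simply
    averages the text and reference-image embeddings.
    """
    return [(a + b) / 2 for a, b in zip(text_emb, ref_emb)]


def sample(text_emb, ref_emb, num_steps=4, seed=0):
    """Start from pure noise and remove it over num_steps steps."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in text_emb]  # fully noisy embedding
    for i in range(num_steps):
        t = 1.0 - i / num_steps             # current noise level (1 = all noise)
        t_next = 1.0 - (i + 1) / num_steps  # noise level after this step
        pred_clean = toy_denoiser(x, t, text_emb, ref_emb)
        # Keep a fraction t_next / t of the remaining noise; the rest is
        # replaced by the model's prediction of the clean embedding.
        x = [p + (t_next / t) * (xi - p) for xi, p in zip(x, pred_clean)]
    return x  # in a full system, the image decoder would turn this into pixels


if __name__ == "__main__":
    print(sample(text_emb=[1.0, 3.0], ref_emb=[3.0, 5.0]))
```

Each pass through the loop corresponds to one call to the transformer, which is why a distillation step that cuts the number of steps also cuts generation time.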

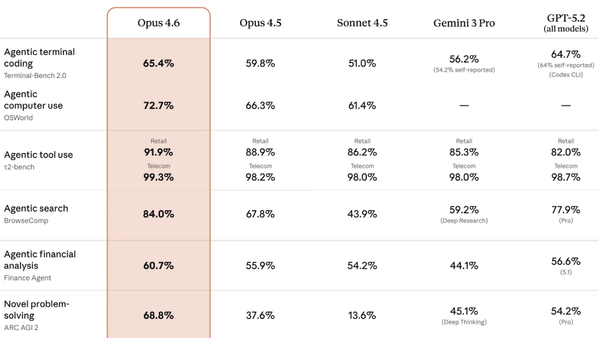

Results: The team compared the output of FLUX.1 Kontext models with that of five competing models including OpenAI GPT Image 1 (at three different quality levels) and Google Gemini 2.0 Flash native image generation. An undisclosed number of people evaluated the models according to a proprietary benchmark that highlights altering local and global aspects of an image, editing generated text within an image, maintaining consistent characters, and generating an image according to a reference style. The dataset included roughly 1,000 crowd-sourced pairs of text prompts and reference images.

- FLUX.1 Kontext max and FLUX.1 Kontext pro outperformed all competing models.

- FLUX.1 Kontext dev outperformed all except other family members and GPT Image 1 set to high or medium quality.

Behind the news: Character consistency, also known as personalization, has come a long way since text-to-image generators became popular. In 2022, Textual Inversion showed how to learn an embedding of a character and use that embedding to produce further images. In 2023, DreamBooth showed how to get good results by fine-tuning a model on a few images of the character to be portrayed in a new situation. Since then, image-editing models such as Meta Emu-Edit, OmniGen, and OpenAI GPT Image 1 have improved in quality and generality.

Why it matters: Consistency and precise editing enable artists to craft stories around specific characters. Such models have become better at generating consistent details across images, but they remain finicky, sometimes changing minute details or entire characters and backgrounds. The more faithfully they help users express their ideas, the more firmly embedded in the creative toolkit they’ll become.

We’re thinking: Black Forest Labs announced plans to publish its proprietary benchmark. There’s a real need for common benchmarks to evaluate image generation, and we hope other developers will give it due consideration.