Forecasting Multiple Time Series: Amazon’s Chronos-2 sorts out tangled variables to make better predictions

Transformers are well suited to predicting future values of time series like energy prices, wages, or weather, but in practice, as in those examples, multiple time series often influence one another. Researchers built a model that can forecast multiple time series simultaneously.

What’s new: Chronos-2 is a pretrained model that can accept and predict multiple time series in a zero-shot manner to forecast series of a single variable (univariate forecasting), multiple variables (multivariate forecasting), and single variables that depend on other variables (covariate-informed forecasting). Its authors include Abdul Fatir Ansari, Oleksandr Shchur, Jaris Küken, and colleagues at Amazon, University of Freiburg, Johannes Kepler University Linz, Boston College, and Rutgers.

- Input/output: Time series in (up to 8,192 time steps), time series out (up to 1,024 time steps)

- Architecture: Modified transformer, 120 million parameters

- Performance: Lower error on average than 14 competing models

- Availability: Weights available for commercial and noncommercial uses under Apache 2.0 license

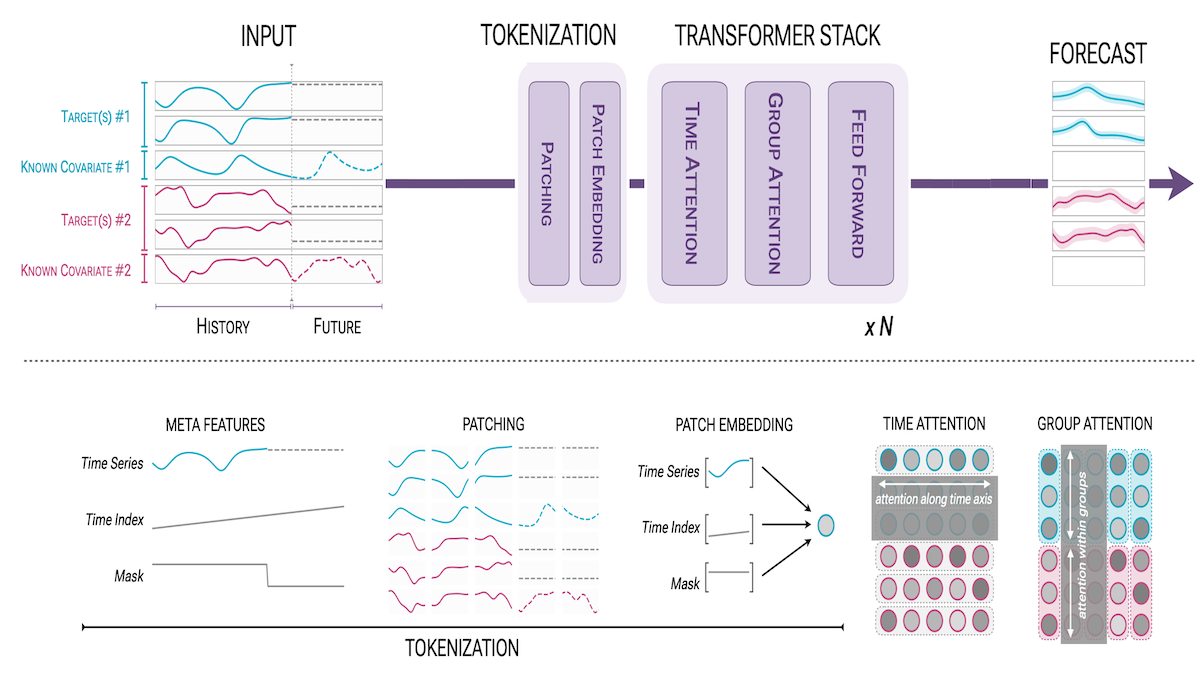

How it works: Given any number of time series, Chronos-2 predicts values at multiple future time steps. It learned to minimize the difference between its predicted future values and ground-truth values in subsets of datasets that contain univariate series (including synthetic data generated using methods from earlier work). The authors supplemented these datasets with synthetic multivariate and covariate data produced using a method of their own: It generates multiple independent time series and then creates dependencies between them by applying mathematical transformations at the same time step and across time steps.
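
The synthetic-data idea can be sketched in a few lines. This is a minimal illustration, not the authors' generator: the random-walk base series, the linear mixing matrix, the single lag, and the names `independent_series` and `couple_series` are all assumptions chosen for brevity.

```python
import numpy as np

def independent_series(n_series, n_steps, seed=0):
    """Independent random walks, shape (n_series, n_steps). Assumed base process."""
    rng = np.random.default_rng(seed)
    return np.cumsum(rng.normal(size=(n_series, n_steps)), axis=1)

def couple_series(base, mix, lag=1, lag_weight=0.5):
    """Introduce dependencies between independent series.

    Same time step: each output series is a linear mix of all base series.
    Across time steps: series i also receives series i-1, delayed by `lag` steps.
    """
    if lag < 1:
        raise ValueError("lag must be at least 1")
    mixed = mix @ base                          # same-step dependency
    neighbor = np.roll(mixed, shift=1, axis=0)  # row i now holds series i-1
    lagged = np.zeros_like(mixed)
    lagged[:, lag:] = neighbor[:, :-lag]        # shift the neighbor forward in time
    return mixed + lag_weight * lagged

base = independent_series(n_series=3, n_steps=200)
mix = np.array([[1.0, 0.0, 0.0],
                [0.6, 0.4, 0.0],
                [0.0, 0.3, 0.7]])               # hypothetical mixing weights
coupled = couple_series(base, mix, lag=2, lag_weight=0.5)
print(coupled.shape)
```

Here the second series depends on the first both at the same step (through `mix`) and two steps later (through the lag), which is the kind of structure a multivariate or covariate-informed forecaster can exploit.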

- Chronos-2 stacks the input time series to make a series of vectors, where each vector represents one time step. The values can be historical values or future values that are known in advance (such as dates of holidays or weather forecasts). For non-overlapping time series (for example, one past and one future), the model aligns the time series by the corresponding time step and adds zeros to either end to equalize the number of time steps.

- Given the series of vectors, the model splits them into non-overlapping patches, and a vanilla neural network with added skip connections, or residual network, turns each patch into an embedding.

- Given the embeddings, it predicts values of each time series for a number of future time steps that haven’t already been assigned a value.

- In addition to the attention layers that perform attention across the time steps of a given time series, Chronos-2 includes what the authors call group attention layers. These layers apply attention across time series or, more specifically, across groups of time series. The user specifies the groups, so the model can produce multiple independent forecasts at once.
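
The steps above (patching, residual embedding, attention along time, attention within groups) can be sketched in NumPy. This is a simplified illustration under stated assumptions, not the Chronos-2 implementation: attention here has a single head and no learned query/key/value projections, the weights are random, patches are left-padded with zeros, and all function names are hypothetical.

```python
import numpy as np

def patchify(series, patch_len):
    """Split (n_series, n_steps) into non-overlapping patches.
    Left-pads with zeros so the length divides evenly (padding side is an assumption)."""
    n_series, n_steps = series.shape
    pad = (-n_steps) % patch_len
    padded = np.pad(series, ((0, 0), (pad, 0)))
    return padded.reshape(n_series, -1, patch_len)

def residual_embed(patches, w_in, w_out, w_skip):
    """Two-layer network plus a linear skip connection, one embedding per patch."""
    hidden = np.maximum(patches @ w_in, 0.0)    # ReLU
    return hidden @ w_out + patches @ w_skip

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention(x, mask=None):
    """Self-attention over the second-to-last axis of x, shape (..., n, d)."""
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(x.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)  # block disallowed pairs
    return softmax(scores) @ x

def time_attention(emb):
    """Attend across patches within each series. emb: (n_series, n_patches, d)."""
    return attention(emb)

def group_attention(emb, group_ids):
    """Attend across series at each patch position, only within the same group."""
    group_ids = np.asarray(group_ids)
    mask = group_ids[:, None] == group_ids[None, :]  # (n_series, n_series)
    per_patch = np.swapaxes(emb, 0, 1)               # (n_patches, n_series, d)
    return np.swapaxes(attention(per_patch, mask), 0, 1)

rng = np.random.default_rng(0)
series = rng.normal(size=(4, 30))                    # 4 series, 30 time steps
patches = patchify(series, patch_len=8)              # shape (4, 4, 8)
w_in = rng.normal(size=(8, 16))
w_out = rng.normal(size=(16, 12))
w_skip = rng.normal(size=(8, 12))
emb = residual_embed(patches, w_in, w_out, w_skip)   # shape (4, 4, 12)
# Series 0 and 1 form one forecasting task, series 2 and 3 another.
mixed = group_attention(time_attention(emb), group_ids=[0, 0, 1, 1])
print(mixed.shape)
```

Because the mask blocks attention between groups, changing a series in one group leaves the outputs for the other group untouched, which is what lets a single batch carry several independent forecasting tasks.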

Results: Across various benchmarks, Chronos-2 outperformed 14 competing zero-shot models according to skill score, a measure of how much a model reduces the average difference between predicted and actual values relative to a baseline model (higher is better, 1 is a perfect score).

- Across univariate, multivariate, and covariate subsets of fev-bench, Chronos-2 achieved the highest skill score.

- On fev-bench, a collection of 100 realistic time-series tasks that include single and multiple input and output time series, Chronos-2 (0.473) outperformed TiRex (0.426), which processes only univariate time series, and Toto-1.0 (0.407), which can process multivariate and covariate time series in some cases.
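
To make the skill score concrete, here is a rough sketch that assumes it is computed as one minus the geometric mean of per-task error ratios between a model and a baseline. That aggregation is an assumption for illustration (the benchmark's exact definition may differ), and the error values below are made up.

```python
import numpy as np

def skill_score(model_errors, baseline_errors):
    """One minus the geometric mean of per-task error ratios (assumed definition).

    0 means no better than the baseline, 1 means zero error,
    and negative values mean worse than the baseline. Errors must be positive.
    """
    ratios = np.asarray(model_errors, dtype=float) / np.asarray(baseline_errors, dtype=float)
    return 1.0 - float(np.exp(np.mean(np.log(ratios))))

# Hypothetical per-task errors for a model and a baseline on three tasks.
model = [0.8, 1.5, 0.4]
baseline = [1.6, 2.0, 1.0]
print(round(skill_score(model, baseline), 3))
```

Under this reading, a score of 0.473 would mean that errors were, in the geometric-mean sense, about 47 percent lower than the baseline's.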

Behind the news: Most previous models, including the earlier Chronos and Chronos-Bolt, predict only univariate time series. Later models like Toto-1.0 and COSMIC process multiple inputs or outputs in limited ways. For instance, Toto-1.0 processes multiple inputs and outputs, but the multiple inputs can only represent past information, not future or static information. COSMIC, on the other hand, can handle multiple inputs (past or future) but not multiple outputs.

Why it matters: Chronos-2 can handle past, future, and static inputs as well as multiple outputs, giving developers, researchers, and companies alike the ability to better predict complex time series.

We’re thinking: The authors’ attention setup is similar to the way many video transformers apply attention separately across space and time. It saves memory compared to performing attention across both at once, and it remains an effective method for understanding data across both.
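
A quick back-of-the-envelope count shows where the memory saving comes from. The sketch below compares the number of attention-score entries for joint attention over every (series, patch) pair with one pass of attention along time plus one pass across series. The sizes are hypothetical, and the count ignores heads, layers, and group masking.

```python
def attention_score_entries(n_series, n_patches):
    """Return (joint, factorized) counts of attention-score entries."""
    tokens = n_series * n_patches
    joint = tokens ** 2                              # every token attends to every token
    along_time = n_series * n_patches ** 2           # per series, patches attend to patches
    across_series = n_patches * n_series ** 2        # per patch position, series attend to series
    return joint, along_time + across_series

# Hypothetical sizes: 8 series, 128 patches each.
print(attention_score_entries(8, 128))  # (1048576, 139264)
```

With these sizes, the factorized scheme stores roughly 7.5 times fewer scores, and the gap widens as the number of series or patches grows.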