Alexa, Open . . . Something

If digital assistants had feet, their Achilles heel would be requiring users to remember commands that invoke new skills. Amazon proposes a way to train systems like Alexa to learn new domains incrementally, so they can parse intent in a phrase like “call a car” even if the skill itself (say, Uber, Lyft, or Grab) isn’t named.

What’s new: Amazon’s proposal, called Continuous Neural Domain Adaptation, or CoNDA, maintains previously learned parameters while updating those relevant to a new domain. This strategy teaches new skills in far less time than retraining a model from scratch. It also avoids the catastrophic forgetting problem, in which new learning displaces old.

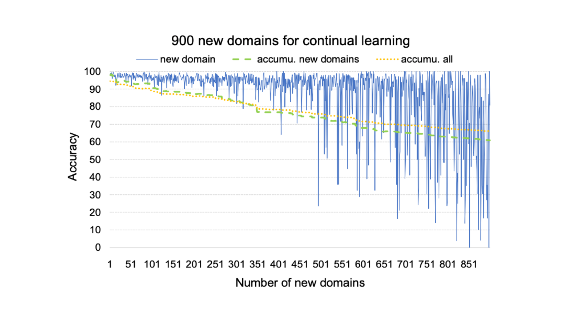

The results: CoNDA achieved 95.6 percent accuracy over 100 new domains and 88.2 percent accuracy for all domains after the new ones had been added. That’s only 3.6 percent lower than if the model had been retrained from scratch.

How it works: Devised by Han Li and his colleagues, CoNDA is a variation on the Shortlister classifier, which comprises three modules. An LSTM-based encoder maps verbal commands to vector representations. A second module generates summarization vectors, freezing parameter weights when a new domain is added and updating only those relevant to new training data. It adds a regularization term to the loss function to avoid overfitting. Finally, a feed-forward network predicts the classification using cosine normalization. A negative sampling procedure maintains known domains to alleviate catastrophic forgetting.
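To make two of those ideas concrete, here is a toy Python sketch of cosine-based domain scoring combined with freezing earlier domains when a new one arrives. It is not Amazon's implementation: the class `DomainClassifier`, its methods, and the hand-written two-dimensional "encodings" are all hypothetical stand-ins, and the LSTM encoder, the regularization term, and negative sampling are left out entirely.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


class DomainClassifier:
    """Hypothetical toy classifier: one vector per domain, older vectors frozen."""

    def __init__(self):
        self.domain_vectors = {}  # domain name -> list of floats
        self.frozen = set()       # domains whose vectors may no longer change

    def add_domain(self, name, example_encodings):
        # Freeze everything learned so far, so the new domain cannot overwrite it.
        self.frozen.update(self.domain_vectors)
        # Assumption: initialize the new domain vector as the mean of its examples.
        count = len(example_encodings)
        dim = len(example_encodings[0])
        self.domain_vectors[name] = [
            sum(e[i] for e in example_encodings) / count for i in range(dim)
        ]

    def update(self, name, gradient, learning_rate=0.1):
        """Apply one gradient step; returns False if the domain is frozen."""
        if name in self.frozen:
            return False
        vector = self.domain_vectors[name]
        self.domain_vectors[name] = [
            w - learning_rate * g for w, g in zip(vector, gradient)
        ]
        return True

    def predict(self, encoding):
        # Score every known domain by cosine similarity and return the best one.
        return max(
            self.domain_vectors,
            key=lambda name: cosine(encoding, self.domain_vectors[name]),
        )


clf = DomainClassifier()
clf.add_domain("weather", [[1.0, 0.0], [0.9, 0.1]])
clf.add_domain("rides", [[0.0, 1.0], [0.2, 0.8]])  # "weather" is frozen from here on
print(clf.predict([0.1, 0.9]))
print(clf.update("weather", [1.0, 1.0]))
```

Because cosine similarity ignores vector length, a long and a short encoding pointing the same way score identically, which is one reason a cosine-normalized output is convenient when new domains with differently scaled parameters are added later.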

Takeaway: The new training method promises relief to digital assistants struggling to recall dozens of invocations. More important, it opens new doors for continuous learning — an essential capability as AI systems are deployed in ever more dynamic environments.